Grid5000:Home: Difference between revisions


(165 intermediate revisions by 13 users not shown)


__NOTOC__ __NOEDITSECTION__

{|width="95%"

|- valign="top"

|bgcolor="#888888" style="border:1px solid #cccccc;padding:2em;padding-top:1em;"|

[[File:Slices-ri-white-color.png|260px|left|link=https://www.slices-ri.eu]]

<b>Grid'5000 is a precursor infrastructure of [https://www.slices-ri.eu SLICES-RI], Scientific Large Scale Infrastructure for Computing/Communication Experimental Studies.</b>

<br/>

Content on this website is partly outdated. Technical information remains relevant.

|}

{|width="95%"

|- valign="top"

|bgcolor="#f5fff5" style="border:1px solid #cccccc;padding:1em;padding-top:0.5em;"|

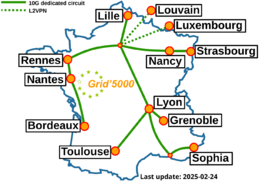

[[Image:g5k-backbone.png|thumbnail|260px|right|Grid'5000|link=https://www.grid5000.fr]]

'''Grid'5000 is a large-scale and flexible testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing, including Cloud, HPC, Big Data and AI.'''

Key features:

* provides '''access to a large amount of resources''': 15,000 cores and 800 compute nodes grouped in homogeneous clusters, featuring various technologies: PMEM, GPU, SSD, NVMe, 10G and 25G Ethernet, InfiniBand, Omni-Path

* '''highly reconfigurable and controllable''': researchers can experiment with a fully customized software stack thanks to bare-metal deployment features, and can isolate their experiment at the networking layer

* '''advanced monitoring and measurement features for collecting network and power consumption traces''', providing a deep understanding of experiments

* '''designed to support Open Science and reproducible research''', with full traceability of infrastructure and software changes on the testbed

* '''a vibrant community''' of 500+ users supported by a solid technical team

<br>

Read more about our [[Team|teams]], our [[Publications|publications]], and the [[Grid5000:UsagePolicy|usage policy]] of the testbed. Then [[Grid5000:Get_an_account|get an account]], and learn how to use the testbed with our [[Getting_Started|Getting Started tutorial]] and the rest of our [[:Category:Portal:User|Users portal]].

<br>

Published documents and presentations:

* [[Media:Grid5000.pdf|Presentation of Grid'5000]] (April 2019)

* [https://www.grid5000.fr/mediawiki/images/Grid5000_science-advisory-board_report_2018.pdf Report from the Grid'5000 Science Advisory Board (2018)]

Older documents:

* [https://www.grid5000.fr/slides/2014-09-24-Cluster2014-KeynoteFD-v2.pdf Slides from Frederic Desprez's keynote at IEEE CLUSTER 2014]

* [https://www.grid5000.fr/ScientificCommittee/SAB%20report%20final%20short.pdf Report from the Grid'5000 Science Advisory Board (2014)]

<br>

Grid'5000 is supported by a scientific interest group (GIS) hosted by Inria and including CNRS, RENATER and several universities, as well as other organizations. Inria has supported Grid'5000 through ADT ALADDIN-G5K (2007-2013), ADT LAPLACE (2014-2016) and IPL [[Hemera|HEMERA]] (2010-2014).

|}

<br>

{{#status:0|0|0|http://bugzilla.grid5000.fr/status/upcoming.json}}

<br>

== Random pick of publications ==

{{#publications:}}

==Latest news==

<rss max=4 item-max-length="2000">https://www.grid5000.fr/rss/G5KNews.php</rss>

----

[[News|Read more news]]

== Grid'5000 sites ==

{|width="100%" cellspacing="3"

|- valign="top"

|width="33%" bgcolor="#f5f5f5" style="border:1px solid #cccccc;padding:1em;padding-top:0.5em;"|

* [[Grenoble:Home|Grenoble]]

* [[Lille:Home|Lille]]

* [[Luxembourg:Home|Luxembourg]]

* [[Louvain:Home|Louvain]]

|width="33%" bgcolor="#f5f5f5" style="border:1px solid #cccccc;padding:1em;padding-top:0.5em;"|

* [[Lyon:Home|Lyon]]

* [[Nancy:Home|Nancy]]

* [[Nantes:Home|Nantes]]

* [[Rennes:Home|Rennes]]

|width="33%" bgcolor="#f5f5f5" style="border:1px solid #cccccc;padding:1em;padding-top:0.5em;"|

* [[Sophia:Home|Sophia-Antipolis]]

* [[Strasbourg:Home|Strasbourg]]

* [[Toulouse:Home|Toulouse]]

|-

|}

== Current funding ==

{|width="100%" cellspacing="3"

|-

| width="50%" bgcolor="#f5f5f5" valign="top" align="center" style="border:1px solid #cccccc;padding:1em;padding-top:0.5em;"|

===INRIA===

[[Image:Logo_INRIA.gif|300px|link=https://www.inria.fr]]

| width="50%" bgcolor="#f5f5f5" valign="top" align="center" style="border:1px solid #cccccc;padding:1em;padding-top:0.5em;"|

===CNRS===

[[Image:CNRS-filaire-Quadri.png|125px|link=https://www.cnrs.fr]]

|-

| width="50%" bgcolor="#f5f5f5" valign="top" align="center" style="border:1px solid #cccccc;padding:1em;padding-top:0.5em;"|

===Universities===

IMT Atlantique<br/>

Université Grenoble Alpes, Grenoble INP<br/>

Université Rennes 1, Rennes<br/>

Institut National Polytechnique de Toulouse / INSA / FERIA / Université Paul Sabatier, Toulouse<br/>

Université Bordeaux 1, Bordeaux<br/>

Université Lille 1, Lille<br/>

École Normale Supérieure, Lyon<br/>

| width="50%" bgcolor="#f5f5f5" valign="top" align="center" style="border:1px solid #cccccc;padding:1em;padding-top:0.5em;"|

===Regional councils===

Aquitaine<br/>

Auvergne-Rhône-Alpes<br/>

Bretagne<br/>

Champagne-Ardenne<br/>

Provence-Alpes-Côte d'Azur<br/>

Hauts-de-France<br/>

Lorraine<br/>

|}

Latest revision as of 10:02, 11 July 2025


Random pick of publications

Five random publications that benefited from Grid'5000 (at least 2934 overall):

- Chih-Kai Huang, Guillaume Pierre. Aggregate Monitoring for Geo-Distributed Kubernetes Cluster Federations. IEEE Transactions on Cloud Computing, 2024, 12 (4), pp.1449-1462. 10.1109/TCC.2024.3482574. hal-04736577

- Adrien Schoen, Gregory Blanc, Pierre-François Gimenez, Yufei Han, Frédéric Majorczyk, et al. A tale of two methods: unveiling the limitations of GAN and the rise of bayesian networks for synthetic network traffic generation. 2024 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Jul 2024, Vienna, Austria. pp.273-286, 10.1109/EuroSPW61312.2024.00036. hal-04871298

- Guillaume Rosinosky, Donatien Schmitz, Etienne Rivière. StreamBed: Capacity Planning for Stream Processing. DEBS 2024 - 18th ACM International Conference on Distributed and Event-based Systems, Jun 2024, Lyon, France. pp.90-102, 10.1145/3629104.3666034. hal-04708354

- Samuel Pélissier, Abhishek Kumar Mishra, Mathieu Cunche, Vincent Roca, Didier Donsez. Efficiently linking LoRaWAN identifiers through multi-domain fingerprinting. Pervasive and Mobile Computing, 2025, 112, pp.102082. 10.1016/j.pmcj.2025.102082. hal-05120767

- François Lemaire, Louis Roussel. Parameter Estimation using Integral Equations. Maple Transactions, 2024, Proceedings of the Maple Conference 2023, 4 (1), 10.5206/mt.v4i1.17126. hal-04560846v2

Latest news


Cluster Spirou is now in default queue at Louvain

We are pleased to announce that the Spirou[1] cluster of the newly installed Louvain site is now available in the default queue.

Spirou is a cluster composed of 8 Lenovo ThinkSystem SR630 V2 nodes, each featuring:

Please note that we have observed I/O inconsistencies on this cluster.

We advise users to take this into account when running experiments on this cluster. See the following bug report for more information: https://intranet.grid5000.fr/bugzilla/show_bug.cgi?id=16938

This cluster was funded by the Fonds de la Recherche Scientifique – FNRS (F.R.S.–FNRS), and its operation is supported by F.R.S.–FNRS and the Wallonia region (SPW).

[1] https://www.grid5000.fr/w/Louvain:Hardware#spirou

Best regards,

Grid'5000 Technical Team

-- Grid'5000 Team 10:24, 12 January 2026 (CEST)


End of support for CentOS 7/8 and CentOS Stream 8 environments

Support for the CentOS 7/8 and CentOS Stream 8 kadeploy environments has ended, due to the end of upstream support and compatibility issues with recent hardware.

The last versions of the CentOS 7 environments (version 2024071117), CentOS 8 environments (version 2024071119) and CentOS Stream 8 environments (version 2024070316) will remain available in /grid5000. Older versions can still be accessed in the archive directory (see /grid5000/README.unmaintained-envs for more information).

-- Grid'5000 Team 08:44, 4 December 2025 (CEST)


Ecotaxe cluster is now in default queue at Nantes

We are pleased to announce that the ecotaxe cluster of Nantes is now available in the default queue.

As a reminder, ecotaxe is a cluster composed of 2 HPE ProLiant DL385 Gen10 Plus v2 servers[1].

Each node features:

To submit a job on this cluster, the following command may be used:

oarsub -t exotic -p ecotaxe
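As a minimal sketch, assuming you are logged in on a Grid'5000 frontend where `oarsub` is available, the command above can be wrapped in two small Bash functions: one for an interactive job (adding `-I`, as in the Grenoble example further down) and one for a batch job that runs a script on the reserved node. The function names and the script path `./my_experiment.sh` are hypothetical; the `-t exotic -p ecotaxe` options are the ones given in this announcement.

```bash
#!/usr/bin/env bash
# Minimal sketch: submitting jobs to the ecotaxe cluster (Nantes).
# Assumption: run on a Grid'5000 frontend where `oarsub` is on the PATH.
# The function names are hypothetical helpers, not Grid'5000 tools.

# Interactive job: same options as in the announcement, plus -I to get a
# shell on the reserved node once the job starts.
submit_ecotaxe_interactive() {
  oarsub -t exotic -p ecotaxe -I
}

# Batch job: the last argument is the command OAR runs on the reserved node.
# "$1" is a hypothetical executable script, e.g. ./my_experiment.sh
submit_ecotaxe_batch() {
  local script="$1"
  oarsub -t exotic -p ecotaxe "$script"
}
```

For example, `submit_ecotaxe_batch ./my_experiment.sh` submits the script as a batch job; the script must be executable for OAR to run it.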

This cluster is co-funded by Région Pays de la Loire, FEDER and REACT EU via the CPER SAMURAI [3].

[1] https://www.grid5000.fr/w/Nantes:Hardware#ecotaxe

[2] The observed throughput depends on multiple parameters such as the workload, the number of streams, ...

[3] https://www.imt-atlantique.fr/fr/recherche-innovation/collaborer/projet/samurai

-- Grid'5000 Team 14:10, 02 December 2025 (CET)


Some changes on the hardware configuration of Grenoble nodes

We recently made some hardware changes to the yeti, troll and dahu clusters.

The changes are as follows:

- yeti-[1,3]: 1× NVMe

- yeti-[2,4]: 2× NVMe

oarsub request. For example:

oarsub -I -p "dahu and opa_count > 0"
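The node lists in this announcement, read here as lists of node numbers (yeti-[2,4] meaning yeti-2 and yeti-4), can be turned into an OAR property filter when an experiment needs specific nodes, for example the yeti nodes with 2× NVMe. The sketch below assumes that node names follow the `<cluster>-<n>.<site>.grid5000.fr` pattern and that the OAR `host` property holds that name; the `host_filter` helper is hypothetical. If the cluster is flagged as exotic, add `-t exotic` as in the ecotaxe example.

```bash
#!/usr/bin/env bash
# Minimal sketch: turn a node list such as yeti-[2,4] into an OAR property
# filter on the `host` property.
# Assumptions (not stated in the announcement): node names follow the
# pattern <cluster>-<n>.<site>.grid5000.fr and `host` holds that name.

# Usage: host_filter <cluster> <site> <node number>...
host_filter() {
  local cluster="$1" site="$2"
  shift 2
  local list="" sep="" n
  for n in "$@"; do
    # Quote each host name as an SQL string literal.
    list="${list}${sep}'${cluster}-${n}.${site}.grid5000.fr'"
    sep=", "
  done
  # OAR -p expressions are SQL-like, so an IN (...) clause selects the hosts.
  printf 'host in (%s)\n' "$list"
}

# Example: an interactive job on the yeti nodes listed with 2x NVMe.
# oarsub -I -p "$(host_filter yeti grenoble 2 4)"
```

Building the filter from node numbers keeps the quoting of the SQL-like expression in one place, which is where hand-written `-p` strings most often go wrong.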

-- Grid'5000 Team 14:50, 24 November 2025 (CEST)
