Grid5000:Home


Grid'5000 is a precursor infrastructure of SLICES-RI, Scientific Large Scale Infrastructure for Computing/Communication Experimental Studies.


Grid'5000 is a large-scale and flexible testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing, including Cloud, HPC, Big Data and AI. Key features:

Older documents:


Random pick of publications

Five random publications that benefited from Grid'5000 (at least 2786 overall):

- Emmanuel Medernach, Gaël Vila, Axel Bonnet, Sorina Camarasu-Pop. ReproVIP Report #2.1.1 Application Deployment Strategies. CREATIS Université Lyon 1. 2024. hal-04551771

- Chuyuan Li, Maxime Amblard, Chloé Braud. A Semi-supervised Dialogue Discourse Parsing Pipeline. Journées Scientifiques du GDR Lift (LIFT 2023), Nov 2023, Nancy, France. hal-04356416

- Matthieu Simonin, Anne-Cécile Orgerie. Méthodologies de calcul d'empreinte carbone sur une plateforme de calcul : exemple du site Grid'5000 de Rennes. JRES 2024 - Journées réseaux de l'enseignement et de la recherche, Renater, Dec 2024, Rennes, France. pp.1-14. hal-04893984

- Antoine Omond, Hélène Coullon, Issam Raïs, Otto Anshus. Leveraging Relay Nodes to Deploy and Update Services in a CPS with Sleeping Nodes. CPSCom 2023: 16th IEEE International Conference on Cyber, Physical and Social Computing, Dec 2023, Danzhou, China. pp.1-8, 10.1109/iThings-GreenCom-CPSCom-SmartData-Cybermatics60724.2023.00102. hal-04372320

- Mateusz Gienieczko, Filip Murlak, Charles Paperman. Supporting Descendants in SIMD-Accelerated JSONPath. International Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS 2024), 2024, San Diego (California), United States. pp.338-361, 10.4230/LIPIcs. hal-04398350

Latest news

The first SLICES-FR School is taking place from July 7th to 11th in Lyon.

This free event, co-organized with the PEPR Cloud and Networks of the Future, brings together researchers, engineers and professionals to explore advances in distributed computing, edge computing, reprogrammable networks and the IoT.

-- Grid'5000 Team 09:30, 9 July 2025 (CEST)

Cluster "vianden" is now in the default queue in Luxembourg

We are pleased to announce that the vianden[1] cluster of Luxembourg is now available in the default queue.

Vianden is a single-node cluster with 8 AMD MI300X GPUs.

The node features:

The AMD MI300X GPUs are not supported by the Grid'5000 default system (Debian 11). However, full GPU functionality can easily be unlocked by deploying the ubuntu2404-rocm environment:

fluxembourg$ oarsub -t exotic -t deploy -p vianden -I

fluxembourg$ kadeploy3 -m vianden-1 ubuntu2404-rocm

More information in the Exotic page.

This cluster was funded by the University of Luxembourg.

[1] https://www.grid5000.fr/w/Luxembourg:Hardware#vianden

-- Grid'5000 Team 11:30, 27 June 2025 (CEST)

Cluster "hydra" is now in the default queue in Lyon

We are pleased to announce that the hydra[1] cluster of Lyon is now available in the default queue.

As a reminder, Hydra is a cluster composed of 4 NVIDIA Grace-Hopper servers[2].

Each node features:

Due to its bleeding-edge hardware, the usual Grid'5000 environments are not supported by default for this cluster.

Hydra requires system environments featuring a Linux kernel >= 6.6. The default system on the hydra nodes is based on Debian 11 but **does not provide functional GPUs**. However, users may deploy the ubuntugh2404-arm64-big environment, which is similar to the official NVIDIA image provided for this machine and provides GPU support.

To submit a job on this cluster, the following command may be used:

oarsub -t exotic -p hydra

This cluster is funded by INRIA and by the Laboratoire de l'Informatique du Parallélisme, with support from ENS Lyon.

[1] Hydra is the largest of the modern constellations according to Wikipedia: https://en.wikipedia.org/wiki/Hydra_(constellation)

[2] https://developer.nvidia.com/blog/nvidia-grace-hopper-superchip-architecture-in-depth/

-- Grid'5000 Team 16:42, 12 June 2025 (CEST)

Cluster "estats" (Jetson nodes in Toulouse) is now kavlan capable

The network topology of the estats Jetson nodes can now be configured, just like for other clusters.

More info in the Network reconfiguration tutorial.

-- Grid'5000 Team 18:25, 21 May 2025 (CEST)

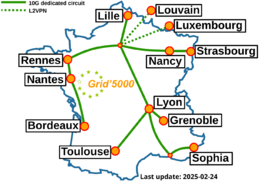

Grid'5000 sites

Current funding

- INRIA
- CNRS
- Universities: IMT Atlantique
- Regional councils: Aquitaine