Getting Started

Revision as of 14:01, 29 July 2021

| Note |
|---|
| This page is actively maintained by the Grid'5000 team. If you encounter problems, please report them (see the Support page). Additionally, as it is a wiki page, you are free to make minor corrections yourself if needed. If you would like to suggest a more fundamental change, please contact the Grid'5000 team. |

This tutorial will guide you through your first steps on Grid'5000. Before proceeding, make sure you have a Grid'5000 account (if not, follow this procedure), and an SSH client.

Getting support

The Support page describes how to get help during your Grid'5000 usage.

There's also an FAQ page and a cheat sheet with the most common commands.

Connecting for the first time

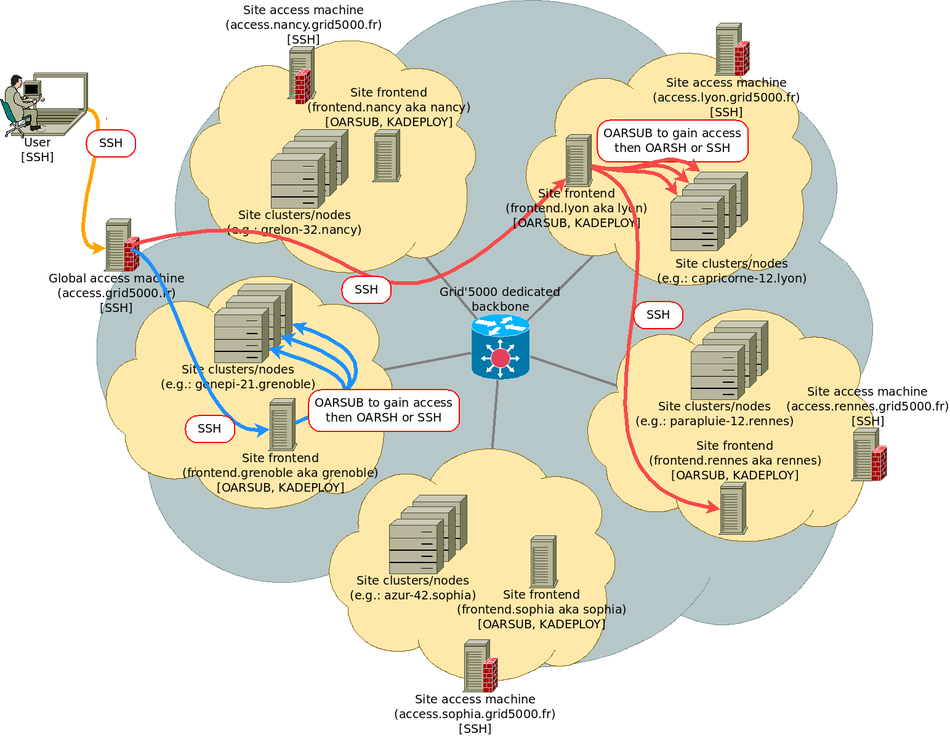

The primary way to move around Grid'5000 is using SSH. A reference page for SSH is also maintained with advanced configuration options that frequent users will find useful.

As described in the figure above, when using Grid'5000, you will typically:

- connect, using SSH, to an access machine

- connect from this access machine to a site frontend

- on this site frontend, reserve resources (nodes), and connect to those nodes

SSH connection through a web interface

If you want an out-of-the-box solution that does not require you to set up SSH, you can connect through a web interface. The interface is available at https://intranet.grid5000.fr/shell/SITE/. For example, to access the Nancy site, use https://intranet.grid5000.fr/shell/nancy/. To connect, you will have to type in your credentials twice (first for the HTTP proxy, then for the SSH connection).

This solution is probably suitable for following this tutorial, but it is unlikely to be suitable for real Grid'5000 usage. At some point, you should read the next sections about how to set up and use SSH.

Connect to a Grid'5000 access machine

To enter the Grid'5000 network from the Internet, you must use an access machine: access.grid5000.fr. (Note that access.grid5000.fr is a round-robin alias for either access-north, currently hosted in Lille, or access-south, currently hosted in Sophia-Antipolis.)

For all connections, you must use the login that was provided to you when you created your Grid'5000 account.

You will get authenticated using the SSH public key you provided in the account creation form. Password authentication is disabled.

| Note |
|---|
| You can modify your SSH keys in the account management interface. |

Connecting to a Grid'5000 site

Grid'5000 is structured in sites (Grenoble, Rennes, Nancy, ...). Each site hosts one or more clusters (homogeneous sets of machines, usually bought at the same time).

To connect to a particular site, do the following (blue and red arrows labeled SSH in the figure above).

- Home directories

You have a different home directory on each Grid'5000 site, so you will usually use rsync or scp to move data around.

On access machines, you have direct access to each of those home directories, through NFS mounts (but using that feature to transfer very large volumes of data is inefficient). Typically, to copy a file to your home directory on the Nancy site, you can use:

Grid'5000 does NOT have a BACKUP service for users' home directories: it is your responsibility to save important data somewhere outside Grid'5000 (or at least to copy data to several Grid'5000 sites to increase redundancy).

Quotas are applied on home directories -- by default, you get 25 GB per Grid'5000 site. If your usage of Grid'5000 requires more disk space, it is possible to request quota extensions in the account management interface, or to use other storage solutions (see Storage).

Recommended tips and tricks for an efficient use of Grid'5000

- Better exploit SSH and related tools

There are also several recommended tips and tricks for SSH and related tools (more details in the SSH page).

- Configure SSH aliases using the ProxyCommand option. With these aliases, you can skip the two-hop connection (access machine, then frontend) and connect directly to frontends. This requires OpenSSH, the SSH software available on all GNU/Linux systems, on macOS, and on recent versions of Microsoft Windows. Add the following to your ~/.ssh/config file:

    Host g5k
      User login
      Hostname access.grid5000.fr
      ForwardAgent no
    Host *.g5k
      User login
      ProxyCommand ssh g5k -W "$(basename %h .g5k):%p"
      ForwardAgent no

Reminder: login is your Grid'5000 username.

Warning: the ProxyCommand line works if your login shell is bash. If it is not, you may have to adapt the line. For instance, for the fish shell, it must be: ProxyCommand ssh g5k -W (basename %h .g5k):%p.

Once done, you can establish connections to any machine (first of all: frontends) inside Grid'5000 directly, by suffixing .g5k to its hostname (instead of first having to connect to an access machine). E.g.:

- Use rsync instead of scp for better performance with multiple files.

- Access your data from your laptop using SSHFS.

- Edit files over SSH with your favorite text editor, with e.g.:

More tips are available in this talk from the Grid'5000 School 2010, and in this talk focused on SSH.
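The ProxyCommand line in the configuration above depends on OpenSSH substituting %h (the hostname you typed) and %p (the port). The suffix .g5k is then stripped with basename, and the g5k access host is asked to forward the connection with -W. The Python sketch below only mimics that substitution to make it explicit. strip_g5k_suffix and proxy_command are hypothetical names; they are not part of any Grid'5000 or OpenSSH tool.

```python
def strip_g5k_suffix(hostname: str, suffix: str = ".g5k") -> str:
    """Mimic `basename HOST .g5k` for a plain hostname (no slashes)."""
    # Like basename(1), leave the name alone if it is exactly the suffix
    if hostname.endswith(suffix) and hostname != suffix:
        return hostname[: -len(suffix)]
    return hostname


def proxy_command(hostname: str, port: int = 22, jump: str = "g5k") -> list:
    """Argv equivalent to the ProxyCommand of the `Host *.g5k` block."""
    # hostname plays the role of %h, port the role of %p
    return ["ssh", jump, "-W", f"{strip_g5k_suffix(hostname)}:{port}"]


if __name__ == "__main__":
    # `ssh fnancy.g5k` makes OpenSSH tunnel through `g5k` to fnancy:22
    print(proxy_command("fnancy.g5k"))  # ['ssh', 'g5k', '-W', 'fnancy:22']
```

Because the suffix is removed before forwarding, any name that the access machine can resolve (a frontend such as fnancy, or a node such as grisou-1.nancy) becomes reachable as NAME.g5k.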

- For better bandwidth or latency, you may also be able to connect directly through the local access machine of one of the Grid'5000 sites.

Local accesses use access.site.grid5000.fr instead of access.grid5000.fr.

However, note that per-site access restrictions apply: see External access for details about local access machines.

- VPN

A VPN service is also available, allowing you to connect directly to any Grid'5000 machine (bypassing the access machines). See the VPN page for more information.

- HTTP reverse proxies

If you only need HTTP/HTTPS access to a node, a reverse HTTP proxy is also available: see the HTTP/HTTPs access page.

- Cheatsheet

The Grid'5000 cheat sheet provides a nice summary of everything described in the tutorials.

Discovering, visualizing and reserving Grid'5000 resources

At this point, you should be connected to a site frontend, as indicated by your shell prompt (login@fsite:~$). This machine will be used to reserve and manipulate resources on this site, using the OAR software suite.

Discovering and visualizing resources

There are several ways to learn about the site's resources and their status:

- The site's MOTD (message of the day) lists all clusters and their features. Additionally, it gives the list of current or future downtimes due to maintenance, which is also available from https://www.grid5000.fr/status/.

- Site pages on the wiki (e.g. Nancy:Home) contain a detailed description of the site's hardware and network:

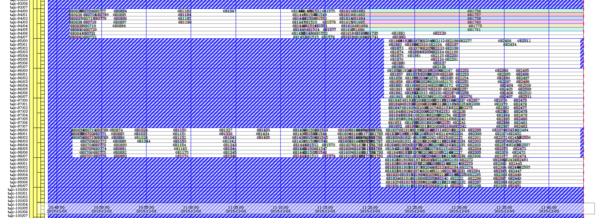

- The Status page links to the resource status on each site, with two different visualizations available:

- Monika, which provides the current status of nodes (see Nancy's current status)

- Gantt, which provides current and planned resource reservations (see Nancy's current status; example in the figure below).

- Hardware pages contain a detailed description of the site's hardware.

Reserving resources with OAR: the basics

| Note |
|---|
| OAR is the resource and job management system (a.k.a. batch manager) used in Grid'5000, just like in traditional HPC centers. However, the OAR settings and rules configured in Grid'5000 differ slightly from traditional batch manager setups in HPC centers, to match the requirements of an experimentation testbed. Please remember to re-read the Grid'5000 Usage Policy to understand the expected usage. |

In Grid'5000, the smallest unit of resource managed by OAR is the core (CPU core), but by default an OAR job reserves a host (a physical computer including all its CPUs and cores, and possibly GPUs). Hence, what OAR calls nodes are hosts (physical machines). In the oarsub resource request (-l arguments), nodes is an alias for host, so both are equivalent. However, prefer host, for consistency with other arguments and with other tools that expose host rather than nodes.

| Note |
|---|
| Most of this tutorial uses the site of Nancy (with the frontend: |

- Interactive usage

To reserve a single host (one node) for one hour, in interactive mode, do:

As soon as the resource becomes available, you will be directly connected to the reserved resource with an interactive shell, as indicated by the shell prompt, and you can run commands on the node:

- Reserving only part of a node

To reserve only one CPU core in interactive mode, run:

When reserving several CPU cores, there is no guarantee that they will be allocated on a single node. To ensure this, you need to specify that you want a single host:

- Non-interactive usage (scripts)

You can also simply launch your experiment along with your reservation:

Your program will be executed as soon as the requested resources are available. As this type of job is not interactive, you will have to check for its termination using the oarstat command.

- Other types of resources

To reserve only one GPU (with the associated CPU cores and share of memory) in interactive mode, run:

Or, in Nancy, where GPUs are only available in the production queue:

To reserve several GPUs and ensure they are located in a single node, make sure to specify host=1:
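The reservations in this section all use OAR's -l resource expression. In that expression, / nests one resource inside another: host=1/core=3 asks for three cores on a single host, and host=1/gpu=2 asks for two GPUs on a single host. walltime is appended after a comma. As an illustrative sketch, the hypothetical helpers below assemble such expressions and return the corresponding oarsub argument list without running it.

```python
from typing import List, Optional, Sequence, Tuple


def resource_request(levels: Sequence[Tuple[str, int]], walltime: Optional[str] = None) -> str:
    """Build an OAR -l value such as 'host=1/core=3,walltime=2'.

    `levels` goes from the outermost resource (e.g. host) to the innermost one.
    """
    if not levels:
        raise ValueError("at least one resource level is required")
    for name, count in levels:
        if count < 1:
            raise ValueError(f"invalid count for {name}: {count}")
    # '/' nests resources: host=1/gpu=2 means two GPUs on the same host
    spec = "/".join(f"{name}={count}" for name, count in levels)
    if walltime is not None:
        spec += f",walltime={walltime}"
    return spec


def oarsub_interactive(levels, walltime=None, queue=None) -> List[str]:
    """Return (without running it) the argv of an interactive reservation."""
    argv = ["oarsub"]
    if queue is not None:
        argv += ["-q", queue]  # e.g. "production" for Nancy's GPU nodes
    return argv + ["-l", resource_request(levels, walltime), "-I"]


if __name__ == "__main__":
    print(oarsub_interactive([("host", 1), ("core", 3)]))
    print(oarsub_interactive([("gpu", 1)], queue="production"))
```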

- Tips and tricks

To terminate your reservation and return to the frontend, simply exit this shell by typing exit or CTRL+d:

To avoid unexpected termination of your jobs in case of errors (terminal closed by mistake, network disconnection), you can either use tools such as tmux or screen, or reserve and connect in two steps using the job ID associated with your reservation. First, reserve a node, and run a sleep command that does nothing for an infinite time:

Of course, the job will not run for an infinite time: the command will be killed when the job expires.

Then:

On the node (here, grisou-42):

    hostname && ps -ef | grep sleep
    env | grep OAR # discover environment variables set by OAR

- Choosing the job duration

Of course, you might want to run a job for a different duration than one hour. The -l option allows you to pass a comma-separated list of parameters specifying the needed resources for the job, and walltime is a special resource defining the duration of your job:

The walltime is the expected duration of your work. Its format is [hour:min:sec|hour:min|hour]. For instance:

- walltime=5 => 5 hours
- walltime=1:22 => 1 hour and 22 minutes
- walltime=0:03:30 => 3 minutes and 30 seconds
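To check how a walltime value is interpreted, you can convert each of the three accepted forms to seconds and back. The small Python sketch below follows the [hour:min:sec|hour:min|hour] format described above. The function names are hypothetical; OAR itself does not expose them.

```python
def walltime_to_seconds(walltime: str) -> int:
    """Convert an OAR walltime ('h', 'h:m' or 'h:m:s') to seconds."""
    parts = walltime.split(":")
    if not 1 <= len(parts) <= 3 or not all(p.isdigit() for p in parts):
        raise ValueError(f"invalid walltime: {walltime!r}")
    # Missing fields default to zero: '5' -> 5:00:00 and '1:22' -> 1:22:00
    hours, minutes, seconds = (int(p) for p in parts + ["0"] * (3 - len(parts)))
    return hours * 3600 + minutes * 60 + seconds


def seconds_to_walltime(total: int) -> str:
    """Format a number of seconds back as 'h:mm:ss'."""
    hours, rest = divmod(total, 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{hours}:{minutes:02d}:{seconds:02d}"


if __name__ == "__main__":
    for value in ("5", "1:22", "0:03:30"):
        print(value, "->", seconds_to_walltime(walltime_to_seconds(value)))
```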

- Working with more than one node

You will probably want to use more than one node on a given site.

To reserve two hosts (two nodes), in interactive mode, do:

or equivalently (nodes is an alias for host):

You will obtain a shell on the first node of the reservation. It is up to you to connect to the other nodes and distribute work among them.

By default, you can only connect to nodes that are part of your reservation, and only using the oarsh connector to go from one node to the other. The connector supports the same options as the classical ssh command, so it can be used as a replacement for software expecting ssh.

| Note |
|---|
| To take advantage of several nodes and distribute work between them, a good option is GNU Parallel. |
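If you prefer to script the distribution yourself rather than use GNU Parallel, a first step is to turn the job's node list into a work plan. This sketch makes two assumptions. First, OAR exports the path of the node file in the OAR_NODEFILE environment variable. Second, that file lists one hostname per line, possibly repeated once per reserved core. The helper names are hypothetical, and the sketch does not launch the tasks (for example with oarsh).

```python
import os
from itertools import cycle


def distinct_hosts(lines) -> list:
    """Distinct hostnames from OAR node-file lines, in order of first appearance."""
    names = [line.strip() for line in lines if line.strip()]
    return list(dict.fromkeys(names))  # the file may repeat a host once per core


def assign_round_robin(tasks, hosts) -> dict:
    """Map each host to the tasks it should run, dealing tasks out in turn."""
    if not hosts:
        raise ValueError("no hosts to distribute work to")
    plan = {host: [] for host in hosts}
    for task, host in zip(tasks, cycle(hosts)):
        plan[host].append(task)
    return plan


if __name__ == "__main__":
    node_file = os.environ.get("OAR_NODEFILE")  # assumed to be set inside a job
    if node_file is None:
        print("OAR_NODEFILE is not set: run this inside an OAR job")
    else:
        with open(node_file) as f:
            hosts = distinct_hosts(f)
        for host, tasks in assign_round_robin(list(range(10)), hosts).items():
            # A real script would now start these tasks on `host` through oarsh
            print(host, tasks)
```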

oarsh is a wrapper around ssh that enables the tracking of user jobs inside compute nodes (for example, to enforce the correct sharing of resources when two different jobs share a compute node). If your application does not support choosing a different connector, you can use plain ssh instead of oarsh by submitting your job with the allow_classic_ssh job type, as in:

Selecting specific resources

So far, all examples let OAR decide which resources to allocate to a job. You can obtain finer-grained control over the allocated resources by using filters.

- Selecting nodes from a specific cluster or cluster type

- Reserve nodes from a specific cluster

- Reserve nodes in the production queue

- Reserve nodes from an exotic cluster type

Clusters with the exotic type either have a non-x86 architecture, or are specific enough to warrant this type. Resources with an exotic type are never selected by default by OAR. Using -t exotic is required to obtain such resources.

The type of a cluster can be identified on the Hardware pages, see for instance Lyon:Hardware.

| Warning |
|---|
| When using the |

- Selecting specific nodes

If you know the exact node you want to reserve, you can specify the hostname of the node you require:

If you want several specific nodes, you can use a list:

On the Grenoble frontend (fgrenoble):

    oarsub -p "host IN ('dahu-5.grenoble.grid5000.fr', 'dahu-12.grenoble.grid5000.fr')" -l host=2,walltime=2 -I

- Using OAR properties

The OAR nodes database contains a set of properties for each node, and the -p option actually filters based on these properties:

- Nodes with Infiniband FDR interfaces:

- Nodes with power sensors and GPUs:

- Nodes with 2 GPUs:

- Nodes with a specific CPU model:

- Since -p accepts SQL, you can write advanced queries, for example on the Nancy frontend (fnancy):

    oarsub -p "wattmeter='YES' AND host NOT IN ('graffiti-41.nancy.grid5000.fr', 'graffiti-42.nancy.grid5000.fr')" -l host=5,walltime=2 -I

The OAR properties available on each site are listed on the Monika pages linked from Status (example page for Nancy). The full list of OAR properties is available on this page.

| Note |
|---|
| Since this uses SQL syntax, quoting is important! Use double quotes to enclose the whole query, and single quotes to write strings within the query. |
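When a script generates the queries, building the SQL string programmatically avoids quoting mistakes. The following hypothetical helpers rebuild the host filters used above. This is a sketch that assumes standard SQL string escaping, where an embedded single quote is doubled. If you pass the argument list directly to a process launcher such as subprocess.run, no shell is involved, so the outer double quotes are not needed.

```python
def sql_string(value: str) -> str:
    """Quote a value as an SQL string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def host_filter(hosts, exclude: bool = False) -> str:
    """Build a -p expression such as: host IN ('a', 'b')."""
    if not hosts:
        raise ValueError("an IN list needs at least one host")
    operator = "NOT IN" if exclude else "IN"
    return f"host {operator} (" + ", ".join(sql_string(h) for h in hosts) + ")"


def oarsub_argv(prop_filter: str, resources: str) -> list:
    # Passed as a list (no shell), so the filter must NOT be wrapped in extra quotes
    return ["oarsub", "-p", prop_filter, "-l", resources, "-I"]


if __name__ == "__main__":
    query = "wattmeter='YES' AND " + host_filter(
        ["graffiti-41.nancy.grid5000.fr", "graffiti-42.nancy.grid5000.fr"], exclude=True
    )
    print(oarsub_argv(query, "host=5,walltime=2"))
```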

Advanced job management topics

- Reservations in advance

By default, oarsub will give you resources as soon as possible: once submitted, your request enters a queue. This is good for non-interactive work (when you do not care when exactly it will be scheduled), or when you know that the resources are available immediately.

You can also reserve resources at a specific time in the future, typically to perform large reservations over nights and weekends, with the -r parameter:

| Note |
|---|
| Remember that all your resource reservations must comply with the Usage Policy. You can verify your reservations' compliance with the Policy with |
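The -r parameter expects a start date such as 2021-07-30 19:00:00. As a sketch, the hypothetical helpers below compute the next occurrence of a given weekday and time (for example, Friday at 19:00 for a weekend experiment). They then build the corresponding oarsub argument list without submitting anything. Checking that the request complies with the Usage Policy is still up to you.

```python
from datetime import datetime, timedelta


def next_weekday_at(now: datetime, weekday: int, hour: int, minute: int = 0) -> datetime:
    """Next time strictly after `now` falling on `weekday` (Monday=0) at hour:minute."""
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    if candidate <= now:
        candidate += timedelta(days=7)  # that slot already passed this week
    return candidate


def reservation_argv(start: datetime, resources: str) -> list:
    """Argv for an advance reservation starting at `start`."""
    return ["oarsub", "-r", start.strftime("%Y-%m-%d %H:%M:%S"), "-l", resources]


if __name__ == "__main__":
    friday_evening = next_weekday_at(datetime.now(), weekday=4, hour=19)
    print(reservation_argv(friday_evening, "host=3,walltime=12"))
```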

- Job management

To list currently submitted jobs, use the oarstat command (use the -u option to see only your jobs). A job can be deleted with:

- Extending the duration of a reservation

Provided that the resources are still available after your job, you can extend its duration (walltime) using e.g.:

This will request to add one hour and a half to job 12345.

For more details, see the oarwalltime section of the Advanced OAR tutorial.

Using nodes in the default environment

When you run oarsub, you gain access to physical nodes with a default (standard) software environment. This is a Debian-based system that is regularly updated by the technical team, and it comes with a lot of pre-installed software.

Home directory and /tmp

On each node, the home directory is a network filesystem (NFS): data in your home directory is not actually stored on the node itself, it is stored on a storage server managed by the Grid'5000 team. In particular, it means that all reserved nodes share the same home directory, and it is also shared with the site frontend. For example, you can compile or install software in your home (possibly using pip, virtualenv), and it will be usable on all your nodes.

On the other hand, the /tmp/ directory is stored on a local disk of the node. Use this directory if you need to access data locally.

| Note |
|---|
| The home directory is only shared within a site. Two nodes from different sites will not have access to the same home. |
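A common pattern is to copy input data from the NFS home to the node's local disk at the beginning of a job, so that repeated reads do not go over the network. Below is a minimal Python sketch, assuming the input is a directory in your home. stage_to_local is a hypothetical helper, and the destination defaults to tempfile.gettempdir(), which is usually /tmp on Linux.

```python
import shutil
import tempfile
from pathlib import Path


def stage_to_local(src, local_root=None) -> Path:
    """Copy a directory from the NFS home to node-local storage and return the copy."""
    src = Path(src)
    root = Path(local_root) if local_root is not None else Path(tempfile.gettempdir())
    dest = root / src.name
    # dirs_exist_ok (Python 3.8+) lets a rerun refresh an existing local copy
    shutil.copytree(src, dest, dirs_exist_ok=True)
    return dest


if __name__ == "__main__":
    dataset = Path.home() / "dataset"  # hypothetical input directory in your home
    if dataset.is_dir():
        print("local copy:", stage_to_local(dataset))
```

Remember that the local copy lives only on that node: copy results back to your home before the job ends.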

Example usage

The default environment is similar to what you could find on a typical HPC cluster. Here is an overview of possible usage:

- Use any software that is already installed in the default environment: Git, GCC, Python, Pip, Numpy, Ruby, Java...

- Run MPI programs, possibly taking advantage of several nodes

- Use GPUs with CUDA or AMD ROCm / HIP

- Install packages with Guix

- Run containers with Singularity

- Load additional scientific-related software with Modules

- Boot virtual machines with KVM

Of course, this list is not exhaustive.

Becoming root with sudo-g5k

On HPC clusters, users typically don't have root access. However, Grid'5000 allows more flexibility: if you need to install additional system packages or to customize the system, it is possible to become root. The tool to do this is called sudo-g5k.

| Note |
|---|
| Using sudo-g5k has a cost for the platform: at the end of your job, the node needs to be completely reinstalled so that it is clean for the next user. It is best to avoid running sudo-g5k in very short jobs. |

Additional disks and storage

Some nodes have additional local disks, see Hardware#Storage for a list of available disks for each cluster. These disks are provided as-is, and it is the responsibility of the user to partition them and create a filesystem. Note that there may still be partitions present from a previous job.

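Taking the dahu cluster as an example, you can partition the second 480 GB SSD interactively. The disk is designated by its full PCI path rather than the more usual /dev/sdb, because the sdX aliases may not always point to the same disk, depending on the order in which disks are initialized during boot: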

Create an ext4 filesystem on the first partition:

Mount it and check that it appears:

Besides local disks, more storage options are available.

Deploying your nodes to get root access and create your own experimental environment

Using oarsub gives you access to resources configured in their default (standard) environment, with a set of software selected by the Grid'5000 team. You can use such an environment to run Java or MPI programs, boot virtual machines with KVM, or access a collection of scientific software. However, you cannot deeply customize this software environment.

Most Grid'5000 users use resources in a different, much more powerful way: they use Kadeploy to re-install the nodes with their software environment for the duration of their experiment, using Grid'5000 as a Hardware-as-a-Service Cloud. This enables them to use a different Debian version, another Linux distribution, or even Windows, and get root access to install the software stack they need.

| Note |
|---|
| There is a tool, called |

Deploying nodes with Kadeploy

Reserve one node (the deploy job type is required to allow deployment with Kadeploy):

Start a deployment of the debian10-x64-base image on that node (this takes 5 to 10 minutes):

The -f parameter specifies a file containing the list of nodes to deploy. Alternatively, you can use -m to specify a node (such as -m gros-42.nancy.grid5000.fr). The -k parameter asks Kadeploy to copy your SSH key to the node's root account after deployment, so that you can connect without a password. If you don't specify it, you will need to provide a password to connect, but SSH is often configured to disallow root login with a password. The root password for all Grid'5000-provided images is grid5000.

Reference images are named following the pattern debian version-architecture-type (for example, debian10-x64-base). The debian version can be debian10 (Debian 10 "Buster", released in 07/2019) or debian9 (Debian 9 "Stretch", released in 06/2017). The architecture is x64 (in the past, 32-bit images were also provided). The type can be:

- min = a minimalistic image (standard Debian installation) with minimal Grid'5000-specific customization (the default configuration provided by Debian is used): addition of an SSH server, network interface firmware, etc. (see changes).

- base = min + various Grid'5000-specific tuning for performance (TCP buffers for 10 GbE, etc.), and a handful of commonly needed tools to make the image more user-friendly (see changes). These could introduce an experimental bias.

- xen = base + Xen hypervisor Dom0 + minimal DomU (see changes).

- nfs = base + support for mounting your NFS home and accessing other storage services (Ceph), and using your Grid'5000 user account on deployed nodes (LDAP) (see changes).

- big = nfs + packages for development, system tools, editors, shells (see changes).

And for the standard environment:

- std = big + integration with OAR. Currently, debian10-x64-std is the environment used on the nodes if you or another user did not "kadeploy" another environment (see changes).

As a result, the environments you are the most likely to use are debian10-x64-min, debian10-x64-base, debian10-x64-xen, debian10-x64-nfs, debian10-x64-big, and their debian9 counterparts.
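The naming scheme and the layering of variants can be summarized in a few lines of Python. The PARENT table below is transcribed by hand from the list above (xen and nfs both build on base); it is not queried from Kadeploy. parse_env and layers are hypothetical names used for illustration.

```python
# Transcribed by hand from the list of image types above (not queried from kaenv3)
PARENT = {"min": None, "base": "min", "xen": "base", "nfs": "base", "big": "nfs", "std": "big"}


def parse_env(name: str) -> tuple:
    """Split 'debian10-x64-base' into ('debian10', 'x64', 'base')."""
    parts = name.split("-")
    if len(parts) != 3:
        raise ValueError(f"unexpected environment name: {name!r}")
    return tuple(parts)


def layers(variant: str) -> list:
    """Variants an image builds on, from 'min' up to `variant` itself."""
    chain = []
    current = variant
    while current is not None:
        if current not in PARENT:
            raise ValueError(f"unknown variant: {current!r}")
        chain.append(current)
        current = PARENT[current]
    return chain[::-1]


if __name__ == "__main__":
    distro, arch, variant = parse_env("debian10-x64-nfs")
    print(distro, arch, " -> ".join(layers(variant)))  # debian10 x64 min -> base -> nfs
```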

Environments are also provided and supported for some other distributions, only in the min variant:

- Ubuntu: ubuntu1804-x64-min and ubuntu2004-x64-min

- CentOS: centos7-x64-min and centos8-x64-min

Last, an environment for the upcoming Debian version (also known as Debian testing) is provided: debiantesting-x64-min (only min as well).

The list of all provided environments is available using kaenv3 -l. Note that environments are versioned, and old versions of reference environments are available in /grid5000/images/ on each frontend (as well as images that are no longer supported, such as CentOS 6 images). This can be used to reproduce experiments even months or years later, still using the same software environment.

Customizing nodes and accessing the Internet

Now that your nodes are deployed, the next step is usually to copy data (usually using scp or rsync) and install software.

First, connect to the node as root:

You can access websites outside Grid'5000: for example, to fetch the Linux kernel sources:

| Warning |
|---|
| Please note that, for legal reasons, your Internet activity from Grid'5000 is logged and monitored. |

Let's install stress (a simple load generator) on the node from Debian's APT repositories:

Installing all the software needed for your experiment can be quite time-consuming. There are three approaches to avoid spending time at the beginning of each of your Grid'5000 sessions:

- Always deploy one of the reference environments, and automate the installation of your software environment after the image has been deployed. You can use a simple bash script, or more advanced tools for configuration management such as Ansible, Puppet or Chef.

- Register a new environment with your modifications, using the tgz-g5k tool. More details are provided in the Advanced Kadeploy tutorial.

- Use a tool to generate your environment image from a set of rules, such as Kameleon or Puppet. The Grid'5000 technical team uses these two tools to generate all Grid'5000 environments through a clean and reproducible process.

All those approaches have different pros and cons. We recommend that you start by scripting software installation after deploying a reference environment, and that you move to other approaches when this proves too limited.
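For the first approach, a provisioning script can simply run the same commands as root on every deployed node over SSH. This Python sketch assumes the nodes were deployed with -k, so root SSH needs no password, and that they run a Debian-based image. provision and install_script are hypothetical names. With dry_run=True (the default), the script only returns the commands it would run. Once it grows beyond a few commands, a configuration management tool such as Ansible is usually a better fit.

```python
import shlex
import subprocess


def install_script(packages) -> str:
    """One shell command line installing Debian packages non-interactively."""
    if not packages:
        raise ValueError("no packages to install")
    pkgs = " ".join(shlex.quote(p) for p in packages)
    return f"apt-get update && DEBIAN_FRONTEND=noninteractive apt-get install -y {pkgs}"


def provision(nodes, packages, dry_run: bool = True) -> list:
    """Run the install script as root on each node over SSH; return the argvs used."""
    script = install_script(packages)
    argvs = [["ssh", f"root@{node}", script] for node in nodes]
    if not dry_run:
        for argv in argvs:
            subprocess.run(argv, check=True)  # stop at the first failing node
    return argvs


if __name__ == "__main__":
    for argv in provision(["gros-42.nancy.grid5000.fr"], ["stress"]):
        print(argv)
```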

Checking nodes' changes over time

The Grid'5000 team puts a strong focus on ensuring that nodes meet their advertised capabilities. A detailed description of each node is stored in the Reference API, and the node is frequently checked against this description to detect hardware failures or misconfigurations.

To see the description of grisou-1.nancy.grid5000.fr, use:
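The same description can be retrieved from a script. This sketch makes three assumptions: the Reference API exposes nodes at https://api.grid5000.fr/stable/sites/SITE/clusters/CLUSTER/nodes/NODE, it returns JSON, and node names follow the CLUSTER-NUMBER pattern. Check these assumptions against the Reference API documentation before relying on them. node_url and fetch_node are hypothetical names.

```python
import json
import urllib.request

API_ROOT = "https://api.grid5000.fr/stable"  # assumed base URL of the Reference API


def node_url(fqdn: str) -> str:
    """Map 'grisou-1.nancy.grid5000.fr' to the (assumed) URL of its description."""
    labels = fqdn.split(".")
    if len(labels) < 2:
        raise ValueError(f"expected node.site..., got {fqdn!r}")
    node, site = labels[0], labels[1]
    cluster = node.rsplit("-", 1)[0]  # node names look like <cluster>-<number>
    return f"{API_ROOT}/sites/{site}/clusters/{cluster}/nodes/{node}"


def fetch_node(fqdn: str) -> dict:
    """Download and decode the description (needs network access to the API)."""
    with urllib.request.urlopen(node_url(fqdn)) as response:
        return json.load(response)


if __name__ == "__main__":
    print(node_url("grisou-1.nancy.grid5000.fr"))
```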

Cleaning up after your reservation

At the end of your resources reservation, the infrastructure will automatically reboot the nodes to put them back in the default (standard) environment. There's no action needed on your side.

Going further

In this tutorial, you learned the basics of Grid'5000:

- The general structure of Grid'5000, and how to move between sites

- How to manage your data (one NFS server per site; remember: it is not backed up)

- How to find and reserve resources using OAR and the oarsub command

- How to get root access on nodes using Kadeploy and the kadeploy3 command

You should now be ready to use Grid'5000.

Additional tutorials

There are many more tutorials available on the Users Home page. Please have a look at the page to continue learning how to use Grid'5000.