GNU Parallel

| Note | |
|---|---|
| This page is actively maintained by the Grid'5000 team. If you encounter problems, please report them (see the Support page). Additionally, as it is a wiki page, you are free to make minor corrections yourself if needed. If you would like to suggest a more fundamental change, please contact the Grid'5000 team. | |

This page describes the use of GNU Parallel on Grid'5000.

Quoting the GNU Parallel website:

GNU parallel is a shell tool for executing jobs in parallel using one or more computers. A job can be a single command or a small script that has to be run for each of the lines in the input. The typical input is a list of files, a list of hosts, a list of users, a list of URLs, or a list of tables. A job can also be a command that reads from a pipe. GNU parallel can then split the input and pipe it into commands in parallel.

For more general and complete information, see the GNU Parallel website.

This page details the Grid'5000-specific information that helps you benefit from the tool on the platform.

About the GNU Parallel version installed in Grid'5000

The version of GNU Parallel installed on Grid'5000 nodes comes from Debian's official packaging.

It is a rather old version, but it seems sufficient.

If a more recent version is needed, one can get the tarball provided at http://ftp.gnu.org/gnu/parallel/parallel-latest.tar.bz2 and install it in one's home directory. This is straightforward (e.g. ./configure --prefix=$HOME/parallel && make install).

(An environment module could be provided if requested by some users.)

Benefits of using GNU Parallel in Grid'5000

While OAR is the Resource and Job Management System of Grid'5000 and supports the management of batches of jobs, using OAR with its job container feature may be overkill to handle SPMD parallel executions of small tasks within a larger reservation.

In concrete terms, a user may create an OAR job to book a large set of resources for some time (e.g. for the night), and then submit a batch of many small tasks (e.g. each using only one core) within that first job. For that purpose, using an OAR container for the first job, then OAR inner jobs for the small tasks, is overkill. Indeed, inner jobs have to pay the price of operations such as running prologues and epilogues. These operations are necessary to provide a healthy environment between jobs of possibly different users, but they are useless here because all jobs belong to the same user. In this context, GNU Parallel is more relevant and faster than OAR.

(But please note that using an OAR container and inner jobs makes sense when the jobs do not all belong to the same user, for tutorials or teaching labs for instance.)

We strongly advise using GNU Parallel to handle the execution of the small tasks within the initial OAR reservation of resources. That means creating only one OAR job to book the large set of resources (not using the container job type), then using GNU Parallel within this job.

Note that when GNU Parallel handles the small tasks, some OAR restrictions (e.g. max 200 jobs in queue) do not apply.

How to use GNU Parallel in Grid'5000

GNU Parallel must be used within an OAR job: GNU Parallel does not book resources, it just manages the concurrent/parallel executions of tasks on already reserved resources.

Single node

Within an OAR job of only 1 node (host), there is nothing specific to Grid'5000 to know about the usage of GNU Parallel in order to exploit all the cores of the node. Just run the parallel command in the job shell. See the GNU Parallel documentation or manual page for more information.

Multiple nodes

Within an OAR job of many nodes, the user needs to tell GNU Parallel how to remotely execute the tasks on the nodes reserved in the OAR job, from the head node (where the parallel command is run).

- The user has to provide the list of target nodes to execute on, passing it via the GNU Parallel --slf option. It is possible to use the OAR nodes file $OAR_NODEFILE. (Note that this file contains as many lines with a node name as that node has cores. These duplicates are however not an issue for GNU Parallel, as it preprocesses the file to keep only unique entries.)

- The user has to use the oarsh connector, by passing it to the GNU Parallel --ssh option (this is only needed if the job did not reserve all the CPU cores of a node).
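The two points above can be combined in a small wrapper. This is a minimal sketch, not a tool provided by Grid'5000: the function name run_on_job_nodes and the script ./my_task.sh in the usage line are hypothetical, and only parallel, oarsh, --ssh, --slf and $OAR_NODEFILE come from this page.

```shell
#!/bin/bash
# Sketch: run a command on all the nodes of the current OAR job.
# run_on_job_nodes is a hypothetical helper name, not part of OAR or GNU Parallel.
run_on_job_nodes() {
    # GNU Parallel does not book resources: refuse to run outside of an OAR job.
    if [ -z "$OAR_NODEFILE" ]; then
        echo "Error: not in an OAR job (OAR_NODEFILE is not set)" >&2
        return 1
    fi
    # --ssh oarsh: connect to the nodes with the OAR connector.
    # --slf:       take the target nodes from the OAR nodes file
    #              (duplicate lines are not an issue, as noted above).
    parallel --ssh oarsh --slf "$OAR_NODEFILE" "$@"
}

# Usage, from the head node of the job (./my_task.sh is a placeholder):
#   run_on_job_nodes ./my_task.sh {} ::: 1 2 3 4
```

Everything after the function name is handed to parallel unchanged, so any other GNU Parallel option can be added on the command line.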

Confining GNU Parallel tasks to GPU, CPU, or cores

OAR allows running jobs at a finer grain than a host, that is, confining the job execution to CPUs, GPUs or cores. This execution isolation can also be obtained when combining OAR with GNU Parallel.

After reserving a whole set of resources at the host grain with oarsub (say, N hosts), GNU Parallel executors can be confined to subsets of the resources of the OAR job, for instance only a few cores or only 1 GPU per GNU Parallel task.

To do so, GNU Parallel executors (sshlogin) must define their isolation context (which CPU, GPU, cores to use), using oarsh with the OAR_USER_CPUSET and/or OAR_USER_GPUDEVICE environment variables set. The oarprint command provides what is necessary for that.

- Confining to GPUs

For instance, let's say one wants one GNU Parallel executor per GPU on 2 bi-GPU machines. First, one reserves a job and gets, say, chifflet-3 and chifflet-7. One then uses oarprint to create a GNU Parallel sshlogin file, which defines the executors as follows:

chifflet-3: oarprint gpu -P gpudevice,cpuset,host -C+ -F "1/OAR_USER_GPUDEVICE=% OAR_USER_CPUSET=% oarsh %" | tee gpu-executors

1/OAR_USER_GPUDEVICE=0 OAR_USER_CPUSET=20+18+14+12+16+0+6+26+24+22+10+4+2+8 oarsh chifflet-3.lille.grid5000.fr

1/OAR_USER_GPUDEVICE=0 OAR_USER_CPUSET=20+18+12+14+16+0+26+24+22+6+10+4+8+2 oarsh chifflet-7.lille.grid5000.fr

1/OAR_USER_GPUDEVICE=1 OAR_USER_CPUSET=25+13+27+11+9+15+23+19+1+21+17+5+7+3 oarsh chifflet-3.lille.grid5000.fr

1/OAR_USER_GPUDEVICE=1 OAR_USER_CPUSET=13+25+27+11+23+19+15+9+1+21+17+7+5+3 oarsh chifflet-7.lille.grid5000.fr

Each line of the gpu-executors file instructs GNU Parallel to confine the execution of one task (and only one, as per the 1/ at the beginning of the line) to the GPU whose id is given in OAR_USER_GPUDEVICE, and to the cores whose ids are given in OAR_USER_CPUSET (these are the cores associated with the GPU in the OAR resources definition).

Also note that the use of oarsh is set in the line, so the --ssh oarsh option is not required in that case.

We use the -C+ option of oarprint because GNU Parallel cannot use , as a separator for the OAR_USER_CPUSET values in the sshlogin file (oarsh accepts + as well as , or . or :).
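To make the line format concrete, here is a small sketch that rebuilds one such line by hand. The function name make_executor_line is hypothetical; in practice oarprint generates these lines. The sketch assumes the core ids are given comma-separated and converts them to the + separator, as -C+ does.

```shell
#!/bin/bash
# Sketch: build one sshlogin line like those of the gpu-executors file.
# make_executor_line is a hypothetical helper; oarprint does this for real.
# Arguments: <gpu id> <comma-separated core ids> <host>
make_executor_line() {
    local gpu=$1 cores=$2 host=$3
    # Replace every ',' by '+', since ',' cannot be used as the separator
    # for the OAR_USER_CPUSET values in the sshlogin file.
    local cpuset=${cores//,/+}
    # "1/" limits the executor to one task at a time.
    printf '1/OAR_USER_GPUDEVICE=%s OAR_USER_CPUSET=%s oarsh %s\n' \
        "$gpu" "$cpuset" "$host"
}

make_executor_line 0 0,2,4,6 chifflet-3.lille.grid5000.fr
# prints: 1/OAR_USER_GPUDEVICE=0 OAR_USER_CPUSET=0+2+4+6 oarsh chifflet-3.lille.grid5000.fr
```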

One then just has to make GNU Parallel use that file:
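A minimal sketch of such an invocation, using only the --slf option mentioned earlier on this page. The function name run_with_executors and the task script ./my_gpu_task.sh are hypothetical.

```shell
#!/bin/bash
# Sketch: run tasks through the executors defined in an sshlogin file.
# run_with_executors and ./my_gpu_task.sh are hypothetical names.
# Arguments: <sshlogin file> <command and arguments for parallel...>
run_with_executors() {
    local executors=$1
    shift
    if [ ! -r "$executors" ]; then
        echo "Error: cannot read sshlogin file '$executors'" >&2
        return 1
    fi
    # No --ssh option here: oarsh is already set in each line of the file.
    parallel --slf "$executors" "$@"
}

# Usage, from the head node of the job:
#   run_with_executors gpu-executors ./my_gpu_task.sh {} ::: 1 2 3 4 5 6 7 8
```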

- Confining to CPUs

If only the CPU matters, one can use:

- Confining to cores

For confinement to cores (warning: every core will come with its hyperthreading sibling, if any), one uses:
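The CPU and core variants can be sketched by analogy with the GPU command. This is an assumption rather than a command taken from this page: it supposes that oarprint accepts cpu and core as resource types in the same way as gpu, and the function name make_executors is hypothetical.

```shell
#!/bin/bash
# Sketch: generate an sshlogin file with one executor per CPU or per core.
# Assumption: built by analogy with the GPU command of this page; the
# function name make_executors is hypothetical.
# Arguments: <cpu|core> <output file>
make_executors() {
    local resource=$1 output=$2
    case "$resource" in
        cpu|core) ;;
        *) echo "Error: resource must be 'cpu' or 'core'" >&2; return 1 ;;
    esac
    # Only OAR_USER_CPUSET is set: no GPU confinement is requested here.
    oarprint "$resource" -P cpuset,host -C+ -F "1/OAR_USER_CPUSET=% oarsh %" \
        | tee "$output"
}

# Usage, inside an OAR job:
#   make_executors cpu cpu-executors
#   make_executors core core-executors
```

The resulting file is then passed to GNU Parallel with --slf, exactly like the gpu-executors file.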

Typical usage and some examples

Typical coupling of GNU Parallel and OAR in a multi-node reservation

- Create an OAR job of 10 nodes

We create an interactive job for this example, so that the commands below are executed in the opened job shell.

But note that all this can be scripted and passed to the oarsub command of a non-interactive OAR job.
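A minimal sketch of both ways of creating the job, assuming the standard oarsub options -I (interactive job) and -l (resource request). The function names and the script ./my_batch.sh are hypothetical.

```shell
#!/bin/bash
# Sketch: the two ways of creating the OAR job described above.
# ./my_batch.sh is a hypothetical script that would contain the parallel command.

# Interactive job: opens a shell on the head node of the job.
reserve_interactive() {
    oarsub -I -l "nodes=${1:-10}"
}

# Non-interactive job: OAR runs the given script on the head node.
reserve_batch() {
    oarsub -l "nodes=${1:-10}" "${2:-./my_batch.sh}"
}

# Usage, from a frontend:
#   reserve_interactive 10
#   reserve_batch 10 ./my_batch.sh
```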

- Run parallel with the --ssh and --sshloginfile options

| Note | |
|---|---|
| GNU Parallel has many, many, many features to pass | |

Example 1: Finding the best kernel parameters of an SVM algorithm

In this example, let's consider a Machine Learning problem in which we want to find the best kernel parameters of an SVM algorithm. To do that, we first need to build several training models configured with different kernel parameter values, and later compute precision and recall for all these models to find the best one. In this example, we only focus on building all the training models.

We consider a Python script training.py that takes as arguments the two kernel parameters of the SVM problem: --gamma and --c.

We want to generate the SVM models for:

- gamma values ranging in [0.1, 0.01, 0.001, 0.0001]

- C values ranging in [1, 10, 100, 1000]

The number of trainings corresponds to the cross-product of C values and gamma values. Considering the previous ranges, we need to build 16 SVM models. We can build the 16 SVM models iteratively but to speed up the process, especially for big data sets, it is better to parallelize and distribute the computation on multiple nodes.
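The cross-product can be checked with plain bash loops before involving GNU Parallel. This is only an illustrative sketch: it prints the training commands without running them, and the function name list_trainings is hypothetical.

```shell
#!/bin/bash
# Sketch: enumerate the (gamma, C) combinations with plain bash loops.
gammas=(0.1 0.01 0.001 0.0001)
cs=(1 10 100 1000)

# Print one training command per combination, without executing it.
list_trainings() {
    local gamma c
    for gamma in "${gammas[@]}"; do
        for c in "${cs[@]}"; do
            echo "./training.py --gamma $gamma --c $c"
        done
    done
}

list_trainings
# Number of combinations: 4 gamma values x 4 C values.
echo "Total: $(( ${#gammas[@]} * ${#cs[@]} )) trainings"
# last line printed: Total: 16 trainings
```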

Let's assume that the cluster we want to use (mycluster) has nodes with 4 cores. As our SVM algorithm is single-threaded, we need at least 4 nodes to run the 16 computations at the same time. To do so, we first make an OAR reservation to book 4 nodes of the cluster.

Then, from the shell opened by oarsub on the head node of the job, we execute the following command to start 4 computations on each of the 4 nodes:

node-1: parallel --ssh oarsh --sshloginfile $OAR_FILE_NODES ./training.py --gamma {1} --c {2} ::: 0.1 0.01 0.001 0.0001 ::: 1 10 100 1000

As a result, this command line will execute (the order may be different):

- on node 1:

./training.py --gamma 0.1 --c 1

./training.py --gamma 0.1 --c 10

./training.py --gamma 0.1 --c 100

./training.py --gamma 0.1 --c 1000

- on node 2:

./training.py --gamma 0.01 --c 1

./training.py --gamma 0.01 --c 10

./training.py --gamma 0.01 --c 100

./training.py --gamma 0.01 --c 1000

- on node 3:

./training.py --gamma 0.001 --c 1

./training.py --gamma 0.001 --c 10

./training.py --gamma 0.001 --c 100

./training.py --gamma 0.001 --c 1000

- on node 4:

./training.py --gamma 0.0001 --c 1

./training.py --gamma 0.0001 --c 10

./training.py --gamma 0.0001 --c 100

./training.py --gamma 0.0001 --c 1000

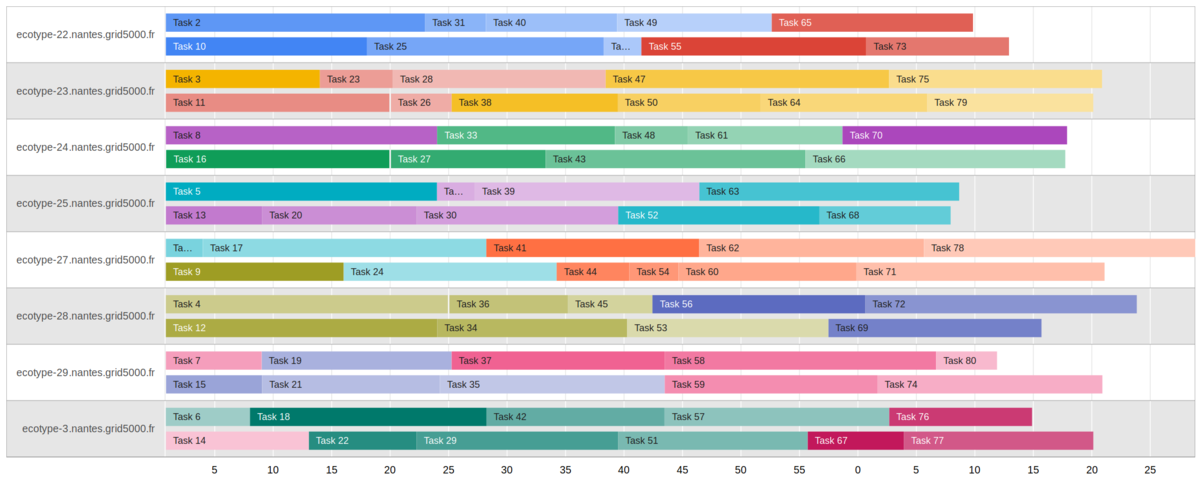

Example 2: Illustrating the execution of the GNU Parallel tasks in a gantt chart

In this example, we use GNU Parallel and build a gantt diagram showing the timeline of execution of the GNU Parallel tasks within an OAR job.

Below is an example of such a diagram. It illustrates how the GNU Parallel tasks are dispatched on the resources previously reserved in an OAR job. This complements the OAR drawgantt display by showing what is happening inside the OAR job.

Please find below how GNU Parallel is used in this case, and the steps to build the diagram.

- Initiate your workspace

Our workspace is on the nantes frontend (any other site would do as well), in our ~/public directory, because we will open the gantt chart in a web browser at the end.

mkdir -p ~/public/parallel

cd ~/public/parallel

- Create the GNU Parallel task script "task.sh"

This is where the actual user program (e.g. numerical simulation, ...) is to be placed. For this example, we simply sleep for a random time, from 4 to 25 s. The parameters of the user program ($@) are passed by GNU Parallel.

#!/bin/bash

DURATION=$(($RANDOM % 22 + 4))

echo "Running $0 with params $* on $(hostname)... (task will run for $DURATION s)."

sleep $DURATION


- Create the OAR script "oarjob.sh"

#!/bin/bash

[ -z "$OAR_NODEFILE" ] && echo "Error: Not in an OAR job" 1>&2 && exit 1

cd "$(dirname "$0")" || exit 1

TASK_COUNT=${1:-10}

seq "$TASK_COUNT" | parallel --joblog "$PWD/parallel.log" --bar --ssh oarsh --sshloginfile "$OAR_NODEFILE" --use-cpus-instead-of-cores "$PWD/task.sh"

Here we simply pass a list of parameters to parallel on its standard input, using the seq command. The parameters are thus the integers from 1 to TASK_COUNT: TASK_COUNT tasks will be executed, each taking one of those integers as its parameter. See the GNU Parallel documentation for more details and other options.

We use the --use-cpus-instead-of-cores option of GNU Parallel, so that it runs one task per CPU, that is, only 2 tasks at a time on each node (the nodes used below are bi-CPU).

The script will start the GNU Parallel command from the head node of the OAR job, and generate the "parallel.log" file.

- Create the html/javascript code to render the gantt chart of the tasks in "ganttchart.html"

The parallel.log file is generated while GNU Parallel is executing the tasks. We create the ganttchart.html web page to render the gantt chart from it.

<html>

<head>

<script type="text/javascript" src="https://www.gstatic.com/charts/loader.js"></script>

<script type="text/javascript">

var queryDict = {'refresh': 3000};

location.search.substr(1).split("&").forEach(function(item) {queryDict[item.split("=")[0]] = item.split("=")[1]})

google.charts.load("current", {packages:["timeline"]});

google.charts.setOnLoadCallback(getLogs);

function getLogs(){

var request = new XMLHttpRequest();

request.open('GET', './parallel.log?_=' + new Date().getTime(), true); //force cache miss

request.responseType = 'text';

request.send(null);

request.onreadystatechange = function () {

if (request.readyState === 4 && request.status === 200) {

var lines = request.responseText.split('\n').filter(function(line) { return line.length > 0 && line.match(/^\d/); });

var data = lines.map(function(line) {

var a = line.split('\t');

return [ a[1], "Task "+a[0], new Date(a[2]*1000), new Date(a[2]*1000+a[3]*1000) ];

});

data.sort((a, b) => (a[0] > b[0]) ? 1 : ((a[0] < b[0]) ? -1 : ((a[1] > b[1]) ? 1 : -1)));

var container = document.getElementById('gantt');

var chart = new google.visualization.Timeline(container);

var dataTable = new google.visualization.DataTable();

dataTable.addColumn({ type: 'string', id: 'Resource' });

dataTable.addColumn({ type: 'string', id: 'JobId' });

dataTable.addColumn({ type: 'date', id: 'Start' });

dataTable.addColumn({ type: 'date', id: 'End' });

dataTable.addRows(data);

chart.draw(dataTable);

if (queryDict['refresh'] > 0) {

setTimeout(getLogs, queryDict['refresh']);

}

}

}

}

</script>

</head>

<body>

<div id="gantt" style="height: 100%;"></div>

</body>

</html>

- Submit the OAR job named OAR2parallel requesting 8 nodes of the ecotype cluster, and executing the oarjob.sh script.

oarsub -n OAR2parallel -l nodes=8 -p "cluster='ecotype'" "./oarjob.sh 80"

80 tasks to run: roughly 10 tasks per node, 5 per CPU (bi-CPU nodes). The gantt chart shows how the tasks are actually dispatched.

- Open the ganttchart.html web page

Open the web page in a web browser on your workstation:

- either using the Grid'5000 VPN, so that you can access the internal URL at: http://public.nantes.grid5000.fr/~YOUR_LOGIN/parallel/ganttchart.html

- or using the API reverse proxy service, at: https://api.grid5000.fr/stable/grid5000/sites/nantes/public/YOUR_LOGIN/parallel/ganttchart.html

(Remember to replace YOUR_LOGIN with your actual Grid'5000 user login.)

The gantt chart will show up and refresh every 3 seconds while GNU Parallel executes tasks. It should look very much like the diagram shown at the beginning of this section.

- Bonus, show the progress

GNU Parallel progress (--bar option) is written to the OAR job stderr file. We can look at it in a loop:

OAR_JOB_ID=$(oarstat -u | grep OAR2parallel | cut -d' ' -f1)

while oarstat -u | grep -q "$OAR_JOB_ID"; do

sleep 1 && [ -r "OAR.OAR2parallel.$OAR_JOB_ID.stderr" ] && cat "OAR.OAR2parallel.$OAR_JOB_ID.stderr"

done

28% 23:57=47s 39

Wait until it reaches 100% and the OAR job finishes.
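Once the job has finished, the parallel.log file can also be summarized from the shell. This is a minimal sketch relying on the tab-separated job log format of GNU Parallel (Seq, Host, Starttime, JobRuntime, ...), which is also what the ganttchart.html page parses. The function name summarize_joblog is hypothetical.

```shell
#!/bin/bash
# Sketch: count tasks and sum run times per host from a GNU Parallel job log.
# summarize_joblog is a hypothetical helper name.
# Joblog columns are tab-separated: Seq, Host, Starttime, JobRuntime, ...
summarize_joblog() {
    awk -F '\t' '
        /^[0-9]/ {              # skip the header line, which starts with "Seq"
            count[$2]++
            runtime[$2] += $4
        }
        END {
            for (host in count)
                printf "%s\t%d tasks\t%.1f s\n", host, count[host], runtime[host]
        }
    ' "${1:-parallel.log}" | sort
}

# Usage, in the workspace directory:
#   summarize_joblog parallel.log
```

The output has one line per host, with the number of tasks it ran and their cumulated run time.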

Example 3: Confining GNU Parallel tasks to execute on single isolated GPU only

In this example, we run a batch of tasks, each of which has to execute on a single GPU, while making sure it does not access the GPU used by another task.

See the section "Confining GNU Parallel tasks to GPU, CPU, or cores" above for more details.

- First, we reserve an OAR job with 2 nodes, which have 2 GPUs each.

We use the chifflet cluster in Lille.

Let's say we got chifflet-3 and chifflet-7.

- We generate the GNU Parallel sshlogin file, for the execution on every GPU

From the head node of the OAR job, chifflet-3, we use oarprint as follows to create the gpu-executors file which will be used as the GNU Parallel sshlogin file:

chifflet-3: oarprint gpu -P gpudevice,cpuset,host -C+ -F "1/OAR_USER_GPUDEVICE=% OAR_USER_CPUSET=% oarsh %" | tee gpu-executors

1/OAR_USER_GPUDEVICE=0 OAR_USER_CPUSET=20+18+14+12+16+0+6+26+24+22+10+4+2+8 oarsh chifflet-3.lille.grid5000.fr

1/OAR_USER_GPUDEVICE=0 OAR_USER_CPUSET=20+18+12+14+16+0+26+24+22+6+10+4+8+2 oarsh chifflet-7.lille.grid5000.fr

1/OAR_USER_GPUDEVICE=1 OAR_USER_CPUSET=25+13+27+11+9+15+23+19+1+21+17+5+7+3 oarsh chifflet-3.lille.grid5000.fr

1/OAR_USER_GPUDEVICE=1 OAR_USER_CPUSET=13+25+27+11+23+19+15+9+1+21+17+7+5+3 oarsh chifflet-7.lille.grid5000.fr

- We create the task script

~/script.sh

#!/bin/bash

echo ===============================================================================

echo "TASK: $@"

echo -n "BEGIN: "; date

echo -n "Host: "; hostname

echo -n "Threads: "; cat /sys/fs/cgroup/cpuset/$(< /proc/self/cpuset)/cpuset.cpus

echo -n "GPUs: "; nvidia-smi | grep -io -e " \(tesla\|geforce\)[^|]\+|[^|]\+" | sed 's/|/=/' | paste -sd+ -

sleep 3

echo -n "END: "; date

The script shows the threads and GPU used by a task.

We make the script executable:

- Finally, we run our GNU Parallel tasks
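A minimal sketch of this last step, under assumptions: the tasks are simply numbered and fed to parallel on its standard input with seq (as in oarjob.sh of Example 2), and the function name run_gpu_tasks is hypothetical. It also makes the script executable first.

```shell
#!/bin/bash
# Sketch: make the task script executable, then run numbered tasks on the
# executors of the gpu-executors file. run_gpu_tasks is a hypothetical name.
# Arguments: [task script, default ~/script.sh] [number of tasks, default 5]
run_gpu_tasks() {
    local script=${1:-$HOME/script.sh} count=${2:-5}
    chmod +x "$script" || return 1
    # Each integer printed by seq becomes the parameter of one task.
    # No --ssh option: oarsh is already set in the gpu-executors file.
    seq "$count" | parallel --slf gpu-executors "$script"
}

# Usage, from the head node of the job, in the directory of gpu-executors:
#   run_gpu_tasks ~/script.sh 5
```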

Here is the output:

===============================================================================

TASK: 1

BEGIN: Thu 27 Feb 2020 10:01:53 PM CET

Host: chifflet-3.lille.grid5000.fr

Threads: 1,3,5,7,9,11,13,15,17,19,21,23,25,27,29,31,33,35,37,39,41,43,45,47,49,51,53,55

GPUs: GeForce GTX 108... Off = 00000000:82:00.0 Off

END: Thu 27 Feb 2020 10:01:56 PM CET

===============================================================================

TASK: 3

BEGIN: Thu 27 Feb 2020 10:01:53 PM CET

Host: chifflet-3.lille.grid5000.fr

Threads: 0,2,4,6,8,10,12,14,16,18,20,22,24,26,28,30,32,34,36,38,40,42,44,46,48,50,52,54

GPUs: GeForce GTX 108... Off = 00000000:03:00.0 Off

END: Thu 27 Feb 2020 10:01:56 PM CET

===============================================================================

TASK: 2

BEGIN: Thu 27 Feb 2020 10:01:53 PM CET

Host: chifflet-7.lille.grid5000.fr

Threads: 1,3,5,7,9,11,13,15,17,19,21,23,25,27,29,31,33,35,37,39,41,43,45,47,49,51,53,55

GPUs: GeForce GTX 108... Off = 00000000:82:00.0 Off

END: Thu 27 Feb 2020 10:01:56 PM CET

===============================================================================

TASK: 4

BEGIN: Thu 27 Feb 2020 10:01:53 PM CET

Host: chifflet-7.lille.grid5000.fr

Threads: 0,2,4,6,8,10,12,14,16,18,20,22,24,26,28,30,32,34,36,38,40,42,44,46,48,50,52,54

GPUs: GeForce GTX 108... Off = 00000000:03:00.0 Off

END: Thu 27 Feb 2020 10:01:56 PM CET

===============================================================================

TASK: 5

BEGIN: Thu 27 Feb 2020 10:01:56 PM CET

Host: chifflet-3.lille.grid5000.fr

Threads: 1,3,5,7,9,11,13,15,17,19,21,23,25,27,29,31,33,35,37,39,41,43,45,47,49,51,53,55

GPUs: GeForce GTX 108... Off = 00000000:82:00.0 Off

END: Thu 27 Feb 2020 10:01:59 PM CET

As expected, every task only has access to 1 GPU.