Grid5000:Home


Content on this website is partly outdated. Technical information remains relevant.

Revision as of 08:50, 10 June 2025


Grid'5000 is a precursor infrastructure of SLICES-RI, Scientific Large Scale Infrastructure for Computing/Communication Experimental Studies.


Grid'5000 is a large-scale and flexible testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing, including Cloud, HPC, Big Data and AI. Key features:

Older documents:


Random pick of publications

Five random publications that benefited from Grid'5000 (at least 2935 overall):

- Wedan Emmanuel Gnibga, Anne Blavette, Anne-Cécile Orgerie. Energy-related Impact of Redefining Self-consumption for Distributed Edge Datacenters. IGSC 2024 - 15th International Green and Sustainable Computing Conference, Nov 2024, Austin, United States. pp.1-7, 10.1109/IGSC64514.2024.00011. hal-04770489

- Léo Valque. 3D Snap rounding. Computer Science [cs]. Université de Lorraine, 2024. English. NNT: 2024LORR0337. tel-05016163

- Rahma Hellali, Zaineb Chelly Dagdia, Karine Zeitouni. A Multi-Objective Multi-Agent Interactive Deep Reinforcement Learning Approach for Feature Selection. International conference on neural information processing, Dec 2024, Auckland, New Zealand. pp.15. hal-04723314

- François Lemaire, Louis Roussel. Parameter Estimation using Integral Equations. Maple Transactions, 2024, Proceedings of the Maple Conference 2023, 4 (1), 10.5206/mt.v4i1.17126. hal-04560846v2

- Pierre Epron, Gaël Guibon, Miguel Couceiro. ORPAILLEUR SyNaLP at CLEF 2024 Task 2: Good Old Cross Validation for Large Language Models Yields the Best Humorous Detection. Working Notes of the Conference and Labs of the Evaluation Forum (CLEF 2024), Sep 2024, Grenoble, France. pp.1841-1856. hal-04696012

Latest news


Cluster Sasquatch is now in default queue at Grenoble

We are pleased to announce that the Sasquatch [1] cluster is now available in the default queue.

Sasquatch is a cluster composed of 2 HPE RL300 nodes, each featuring:

This cluster was funded by the PEPR IA.

[1] https://www.grid5000.fr/w/Grenoble:Hardware#sasquatch

[2] https://amperecomputing.com/briefs/ampere-altra-family-product-brief

Best regards, Grid'5000 Technical Team

-- Grid'5000 Team 10:15, 11 February 2026 (CEST)


Cluster Spirou is now in default queue at Louvain

We are pleased to announce that the Spirou[1] cluster of the newly installed Louvain site is now available in the default queue.

Spirou is a cluster composed of 8 Lenovo ThinkSystem SR630 V2 nodes, each featuring:

Be aware that we have noticed I/O inconsistencies on this cluster.

We advise users to take this into account when running experiments on the cluster. See the following bug for more information: https://intranet.grid5000.fr/bugzilla/show_bug.cgi?id=16938

This cluster was funded by the Fonds de la Recherche Scientifique – FNRS (F.R.S.–FNRS), and its operation is supported by F.R.S.–FNRS and the Wallonia region (SPW).

[1] https://www.grid5000.fr/w/Louvain:Hardware#spirou

Best regards,

Grid'5000 Technical Team

-- Grid'5000 Team 10:24, 12 January 2026 (CEST)


End of support for centOS7/8 and centOSStream8 environments

Support for the centOS7/8 and centOSStream8 kadeploy environments has ended, due to the end of upstream support and compatibility issues with recent hardware.

The last versions of the centOS7 (version 2024071117), centOS8 (version 2024071119) and centOSStream8 (version 2024070316) environments will remain available in /grid5000. Older versions can still be accessed in the archive directory (see /grid5000/README.unmaintained-envs for more information).

-- Grid'5000 Team 08:44, 4 December 2025 (CEST)


Ecotaxe cluster is now in default queue at Nantes

We are pleased to announce that the ecotaxe cluster of Nantes is now available in the default queue.

As a reminder, ecotaxe is a cluster composed of 2 HPE ProLiant DL385 Gen10 Plus v2 servers[1].

Each node features:

To submit a job on this cluster, the following command may be used:

oarsub -t exotic -p ecotaxe
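For scripted submissions, the same command line can be assembled programmatically. The following is only a minimal sketch: the helper name `build_oarsub_command` and its `extra_args` parameter are hypothetical and not part of any Grid'5000 tooling, and the only options used are the `-t` and `-p` options shown in the command above. It prints the command rather than running it, so it can be tried on a machine where `oarsub` is not installed.

```python
import shlex
from typing import List, Sequence


def build_oarsub_command(cluster: str, job_type: str = "exotic",
                         extra_args: Sequence[str] = ()) -> List[str]:
    """Return the argument list for an oarsub call targeting one cluster.

    Hypothetical helper: it only mirrors the command from the announcement.
    """
    # -t carries the job type and -p the cluster selection, as in the
    # announcement; extra_args is appended unchanged.
    return ["oarsub", "-t", job_type, "-p", cluster, *extra_args]


if __name__ == "__main__":
    # Print the command instead of executing it.
    print(shlex.join(build_oarsub_command("ecotaxe")))
```

Keeping the arguments as a list rather than a single string avoids quoting problems if the list is later passed to `subprocess.run` on a frontend where `oarsub` is available.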

This cluster is co-funded by Région Pays de la Loire, FEDER and REACT EU via the CPER SAMURAI [3].

[1] https://www.grid5000.fr/w/Nantes:Hardware#ecotaxe

[2] The observed throughput depends on multiple parameters such as the workload, the number of streams, ...

[3] https://www.imt-atlantique.fr/fr/recherche-innovation/collaborer/projet/samurai

-- Grid'5000 Team 14:10, 02 December 2025 (CET)

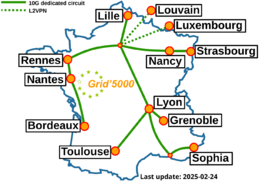

Grid'5000 sites

Current funding

INRIA

CNRS

Universities: IMT Atlantique

Regional councils: Aquitaine