Grid5000:Home

Grid'5000 is a large-scale and flexible testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing, including Cloud, HPC, Big Data, and AI. Key features:

Grid'5000 is merging with FIT to build the SILECS Infrastructure for Large-scale Experimental Computer Science. Read an Introduction to SILECS (April 2018)

Older documents:

Random pick of publications

Five random publications that benefited from Grid'5000 (at least 2777 overall):

- Ismaël Tankeu, Geoffray Bonnin. Towards Characterising Induced Emotions: Exploiting Physiological Data and Investigating the Effect of Music Familiarity. MuRS 2024: 2nd Music Recommender Systems Workshop, Oct 2024, Bari, Italy. hal-04703972

- Wedan Emmanuel Gnibga, Andrew A Chien, Anne Blavette, Anne-Cécile Orgerie. FlexCoolDC: Datacenter Cooling Flexibility for Harmonizing Water, Energy, Carbon, and Cost Trade-offs. e-Energy 2024 - 15th ACM International Conference on Future and Sustainable Energy Systems, Jun 2024, Singapore, Singapore. pp.108-122, 10.1145/3632775.3661936. hal-04581701

- Mathieu Bacou. FaaSLoad: fine-grained performance and resource measurement for function-as-a-service. 2024. hal-04836444

- Chaima Zoghlami. Enhancing V2X communication systems for cooperative perception: road users safety. Library and information sciences. Université Paul Sabatier - Toulouse III, 2023. English. NNT: 2023TOU30172. tel-04344780

- Danilo Carastan-Santos, Georges da Costa, Millian Poquet, Patricia Stolf, Denis Trystram. Light-weight prediction for improving energy consumption in HPC platforms. Euro-Par 2024, Carretero, J., Shende, S., Garcia-Blas, J., Brandic, I., Olcoz, K., Schreiber, M., Aug 2024, Madrid, Spain. pp.152-165, 10.1007/978-3-031-69577-3_11. hal-04566184v2

Latest news

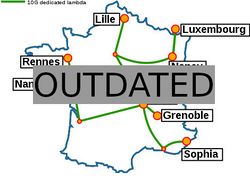

Grid'5000 sites

Current funding

Since June 2008, Inria has been the main contributor to Grid'5000 funding.

INRIA

CNRS

Universities: Université Grenoble Alpes, Grenoble INP

Regional councils: Aquitaine