Grid5000:Home


Grid'5000 is a large-scale and flexible testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing, including Cloud, HPC, Big Data and AI.

Grid'5000 is merging with FIT to build the SILECS Infrastructure for Large-scale Experimental Computer Science. Read an Introduction to SILECS (April 2018)

Older documents:


Random pick of publications

Five random publications that benefited from Grid'5000 (at least 2780 overall):

- Clément Barthélemy, Francieli Zanon Boito, Emmanuel Jeannot, Guillaume Pallez, Luan Teylo. Implementation of an unbalanced I/O Bandwidth Management system in a Parallel File System. RR-9537, Inria. 2024. hal-04417412

- Daniel Balouek, Hélène Coullon. Dynamic Adaptation of Urgent Applications in the Edge-to-Cloud Continuum. Euro-Par 2023: International European Conference on Parallel and Distributed Computing, Aug 2023, Limassol, Cyprus. pp.189-201, 10.1007/978-3-031-50684-0_15. hal-04387689

- Clément Courageux-Sudan, Anne-Cécile Orgerie, Martin Quinson. Studying the end-to-end performance, energy consumption and carbon footprint of fog applications. ISCC 2024 - 29th IEEE Symposium on Computers and Communications, Jun 2024, Paris, France. pp.1-7. hal-04581677

- Romaric Pegdwende Nikiema, Marcello Traiola, Angeliki Kritikakou. Impact of Compiler Optimizations on the Reliability of a RISC-V-based Core. DFT 2024 - 37th IEEE International Symposium on Defect and Fault Tolerance in VLSI and Nanotechnology Systems, Oct 2024, Oxfordshire, United Kingdom. pp.1-1. hal-04731794

- Quentin Guilloteau. Control-based runtime management of HPC systems with support for reproducible experiments. Performance cs.PF. Université Grenoble Alpes 2020-.., 2023. English. NNT: 2023GRALM075. tel-04389290v2


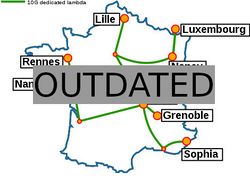

Grid'5000 sites

Current funding

Since June 2008, Inria has been the main contributor to Grid'5000 funding.

- INRIA
- CNRS
- Universities: Université Grenoble Alpes, Grenoble INP
- Regional councils: Aquitaine