Grid5000:Home


Grid'5000 is a large-scale and versatile testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing including Cloud, HPC and Big Data. Key features:

Older documents:


Random pick of publications

Five random publications that benefited from Grid'5000 (at least 2777 overall):

- Quentin Guilloteau, Florina M Ciorba, Millian Poquet, Dorian Goepp, Olivier Richard. Longevity of Artifacts in Leading Parallel and Distributed Systems Conferences: a Review of the State of the Practice in 2023. REP 2024 - ACM Conference on Reproducibility and Replicability, ACM, Jun 2024, Rennes, France. pp.1-14, 10.1145/3641525.3663631. hal-04562691.

- Maxime Gonthier, Samuel Thibault, Loris Marchal. A generic scheduler to foster data locality for GPU and out-of-core task-based applications. 2024. hal-04146714v2.

- Marina Botvinnik, Tomer Laor, Thomas Rokicki, Clémentine Maurice, Yossi Oren. The Finger in the Power: How to Fingerprint PCs by Monitoring their Power Consumption. DIMVA 2023 - 20th Conference on Detection of Intrusions and Malware & Vulnerability Assessment, Jul 2023, Hamburg, Germany. hal-04153854.

- Charles Bouillaguet, Ambroise Fleury, Pierre-Alain Fouque, Paul Kirchner. We are on the same side. Alternative sieving strategies for the number field sieve. ASIACRYPT 2023 - 29th International Conference on the Theory and Application of Cryptology and Information Security, Dec 2023, Guangzhou, China. pp.138-166, 10.1007/978-981-99-8730-6_5. hal-04112671.

- Houssam Elbouanani, Chadi Barakat, Walid Dabbous, Thierry Turletti. Fidelity-aware Large-scale Distributed Network Emulation. Computer Networks, 2024, 10.1016/j.comnet.2024.110531. hal-04591699.


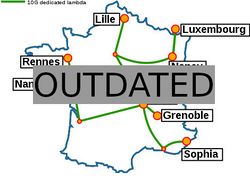

Grid'5000 sites

Current funding

Since June 2008, Inria has been the main contributor to Grid'5000 funding.

- Inria
- CNRS
- Universities: Université Grenoble Alpes, Grenoble INP
- Regional councils: Aquitaine