Grid5000:Home


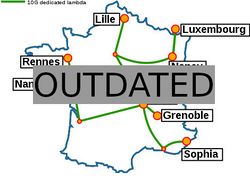

[[Image:renater5-g5k.jpg|thumbnail|250px|right|Grid'5000]] | [[Image:renater5-g5k.jpg|thumbnail|250px|right|Grid'5000]] | ||

Grid'5000 is a large-scale and flexible testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing, including Cloud, HPC, Big Data and AI.

Key features:


Read more about our teams, our publications, and the usage policy of the testbed. Then get an account, and learn how to use the testbed with our Getting Started tutorial and the rest of our Users portal.

Grid'5000 is merging with [FIT](https://fit-equipex.fr) to build the [SILECS Infrastructure for Large-scale Experimental Computer Science](http://www.silecs.net/). Read [an Introduction to SILECS](http://www.silecs.net/wp-content/uploads/2018/04/Desprez-SILECS.pdf) (April 2018).


- Presentation of Grid'5000 (April 2019)
- [Report from the Grid'5000 Science Advisory Board (2018)](https://www.grid5000.fr/mediawiki/images/Grid5000_science-advisory-board_report_2018.pdf)

Older documents:

- [Slides from Frederic Desprez's keynote at IEEE CLUSTER 2014](https://www.grid5000.fr/slides/2014-09-24-Cluster2014-KeynoteFD-v2.pdf)
- [Report from the Grid'5000 Science Advisory Board (2014)](https://www.grid5000.fr/ScientificCommittee/SAB%20report%20final%20short.pdf)

Revision as of 08:23, 4 October 2019


Random pick of publications

Five random publications that benefited from Grid'5000 (at least 2780 overall):

- Chih-Kai Huang, Guillaume Pierre. Aggregate Monitoring for Geo-Distributed Kubernetes Cluster Federations. IEEE Transactions on Cloud Computing, 2024, 12 (4), pp. 1449-1462. 10.1109/TCC.2024.3482574. hal-04736577

- Sofía Callejas, Hernan Lira, Andrew Berry, Luis Martí, Nayat Sanchez-Pi. No Plankton Left Behind: Preliminary results on massive plankton image recognition. 1th Latin American High Performance Computing Conference - CARLA 2024, Ginés Guerrero; Jaime San Martín; Esteban Meneses; Carlos J Barrios H; Carla Osthoff; Jose M Monsalve Diaz, Sep 2024, Santiago de Chile, Chile. hal-04803602

- Tom Hubrecht, Claude-Pierre Jeannerod, Paul Zimmermann. Towards a correctly-rounded and fast power function in binary64 arithmetic. 2023 IEEE 30th Symposium on Computer Arithmetic (ARITH 2023), Sep 2023, Portland, Oregon (USA), United States. hal-04326201

- Shashikant Ilager, Daniel Balouek, Sidi Mohammed Kaddour, Ivona Brandic. Proteus: Towards Intent-driven Automated Resource Management Framework for Edge Sensor Nodes. FlexScience'24: Proceedings of the 14th Workshop on AI and Scientific Computing at Scale using Flexible Computing Infrastructures, Jun 2024, Pisa, Italy. pp. 1-8, 10.1145/3659995.3660037. hal-04775138

- Tristan Coignion, Clément Quinton, Romain Rouvoy. A Performance Study of LLM-Generated Code on Leetcode. EASE'24 - 28th International Conference on Evaluation and Assessment in Software Engineering, Jun 2024, Salerno, Italy. 10.1145/3661167.3661221. hal-04525620


Grid'5000 sites

Current funding

Since June 2008, Inria has been the main contributor to Grid'5000 funding.

- INRIA
- CNRS
- Universities: Université Grenoble Alpes, Grenoble INP
- Regional councils: Aquitaine