
{{Template:Site link|Network}}

[[Image:Nancy-network.png]]

{{Portal|Network}}
{{Portal|User}}

__TOC__

'''See also:''' [[Nancy:Hardware|Hardware description for Nancy]]

The cluster's nodes are connected to an experiment network, used for computation, and to a management network, used for monitoring. The cluster is also interconnected with Grid'5000, so that it can compute together with nodes from the rest of the grid, and with Loria, so that the cluster can be accessed from outside.

| |

|

| |

|

| = Experiment network = | | = Overview of Ethernet network topology = |

|

| |

|

| == Topology ==

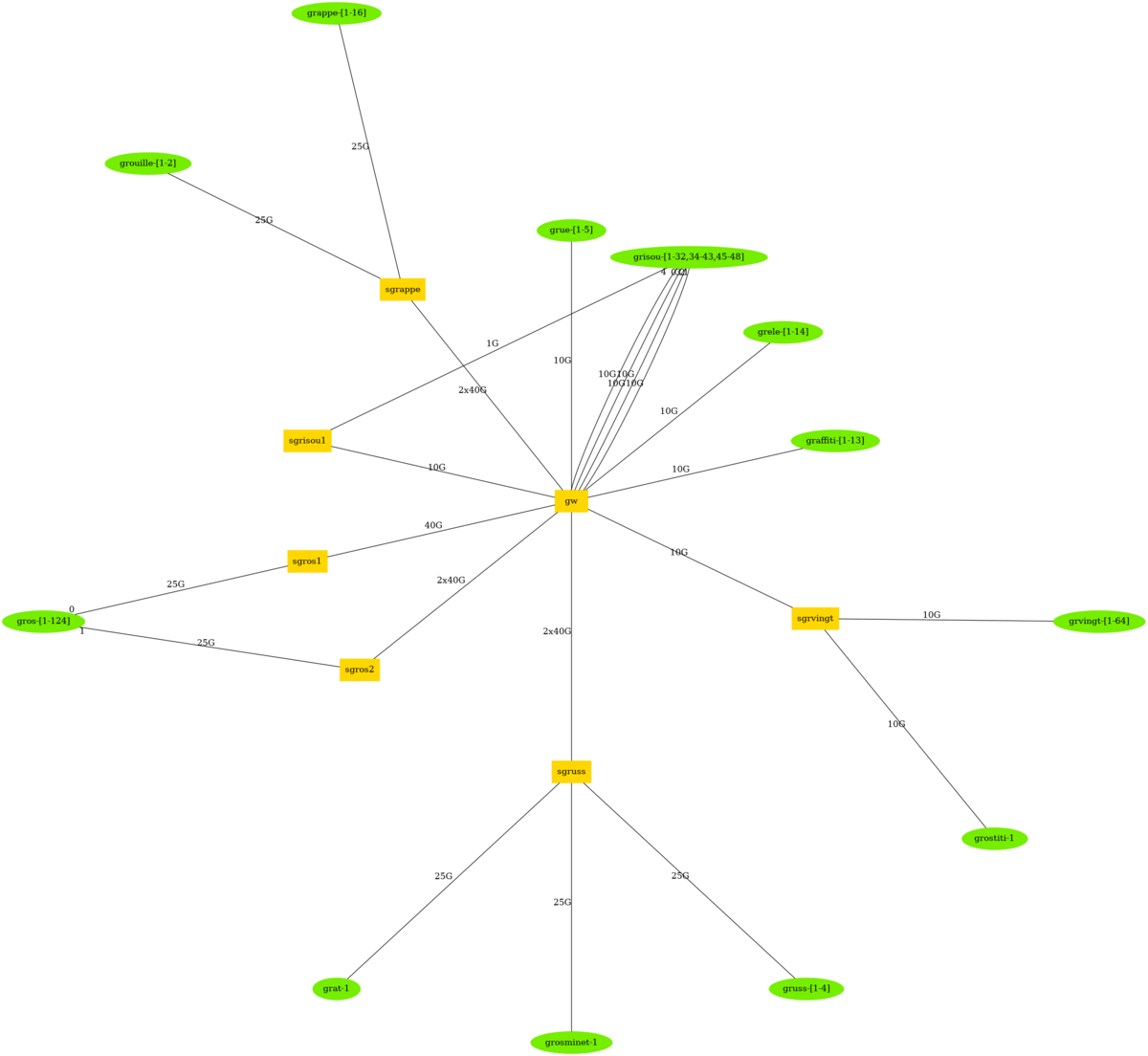

| | [[File:NancyNetwork.png|1200px]] |

|

| |

|

| [[Image:HP_ProCurve_3400cl48G.jpg|thumbnail|300px|right|HP ProCurve 3400cl-48G switch]]

| | {{:Nancy:GeneratedNetwork}} |

| [[Image:HP_ProCurve_2824.jpg|thumbnail|300px|right|HP ProCurve 2824 switch]]

| |

| [[Image:HP_ProCurve_2650.jpg|thumbnail|300px|right|HP ProCurve 2650 switch]]

| |

|

| |

|

| The experiments network is composed of two switches :

| | = HPC Networks = |

| * 48-port HP ProCurve 3400cl-48G, which receives Grid'5000 traffic

| |

| * 24-port HP ProCurve 2824, which receives Laboratory traffic

| |

|

| |

|

| All the compute nodes are connected to the 48-port switch, to avoid jump between switchs during an experiment. The service nodes are connected to the 24-port switch.

| | Several HPC Networks are available. |

|

| |

|

| The 48-port switch is equipped with a 10Gbit transceiver. It receives Grid'5000 traffic on this uplink from the others clusters of the grid. The 24-port switch is connected to the laboratory with one of its 1Gbit uplink.

| | == Omni-Path 100G on grele and grimani nodes == |

|

| |

|

| [[Image:nancy_experiments_topology.png|center|Nancy's experiment network topology]]

| | *<code class="host">grele-1</code> to <code class="host">grele-14</code> have one 100GB Omni-Path card. |

| | *<code class="host">grimani-1</code> to <code class="host">grimani-6</code> have one 100GB Omni-Path card. |

|

| |

|

| | * Card Model: Intel Omni-Path Host Fabric adaptateur series 100 1 Port PCIe x8 |

|

| |

|

| == Addressing == | | == Omni-Path 100G on grvingt nodes == |

| Compute nodes, service nodes and network switches possess an IP address on the experiment network. All these materials are independently numbered. Moreover the two network interfaces of the compute nodes are also independently numbered. So there is a service node n°1 and compute node n°1, with a n°1A for its first interface and a n°1B for its second. It is important that the IP address coroborates this numbering. So, the chosen IP network is <code class="host">172.28.52.0/22</code>.

| |

|

| |

|

| Address: 172.28.52.0 10101100.00011100.001101 00.00000000

| | There's another, separate Omni-Path network connecting the 64 grvingt nodes and some servers. The topology is a non blocking fat tree (1:1). |

| Netmask: 255.255.252.0 = 22 11111111.11111111.111111 00.00000000

| | Topology, generated from <code>opareports -o topology</code>: |

| Wildcard: 0.0.3.255 00000000.00000000.000000 11.11111111

| |

| =>

| |

| Network: 172.28.52.0/22 10101100.00011100.001101 00.00000000

| |

| HostMin: 172.28.52.1 10101100.00011100.001101 00.00000001

| |

| HostMax: 172.28.55.254 10101100.00011100.001101 11.11111110

| |

| Broadcast: 172.28.55.255 10101100.00011100.001101 11.11111111

| |

| Hosts/Net: 1022 Class B, Private Internet

| |

|

| |

|

| This network range is divided between the materials this way :

| | [[File:Topology-grvingt.png|400px]] |

| * <code class="host">172.28.52.[1-255]</code> are dedicated to this network switches

| |

| * <code class="host">172.28.53.[1-255]</code> are dedicated to service nodes

| |

| * <code class="host">172.28.54.[1-254]</code> are dedicated to compute nodes first interface

| |

| * <code class="host">172.28.55.[1-254]</code> are dedicated to compute nodes second interface

| |

|

| |

|

| | | More information about using Omni-Path with MPI is available from the [[Run_MPI_On_Grid%275000]] tutorial. |

| == Naming ==

| |

| Compute nodes, service nodes and network switches are named on the experiment network. Each possess 2 kind of name : one defined in the Grid'5000 specification and another one derived from the cluster name. These names contain the material numbering, which coroborates the IP address.

| |

|

| |

|

| === Switch === | | === Switch === |

| * <code class="host">switch[1-255].nancy.grid5000.fr</code>

| |

| * <code class="host">sgrillon[1-255].nancy.grid5000.fr</code>

| |

|

| |

|

| === Service node ===

| | * Infiniband Switch 4X DDR |

| * <code class="host">frontale[1-255].nancy.grid5000.fr</code> | | * Model based on [http://www.mellanox.com/content/pages.php?pg=products_dyn&product_family=16&menu_section=33 Infiniscale_III] |

| :'''Note:''' <code class="host">frontale.nancy.grid5000.fr</code> is also used for default service node.

| | * 1 commutation card Flextronics F-X43M204 |

| * <code class="host">fgrillon[1-255].nancy.grid5000.fr</code> | | * 12 line cards 4X 12 ports DDR Flextronics F-X43M203 |

| :'''Note:''' <code class="host">fgrillon.nancy.grid5000.fr</code> is also used for default service node.

| |

|

| |

|

| === Compute node === | | === Interconnection === |

| Each compute network interface possess its own name :

| |

| * <code class="host">node-[X]-a.nancy.grid5000.fr</code>

| |

| :'''Note:''' <code class="host">node-[X].nancy.grid5000.fr</code> is also used for the first interface.

| |

| * <code class="host">grillon-[X]-a.nancy.grid5000.fr</code>

| |

| :'''Note:''' <code class="host">grillon-[X].nancy.grid5000.fr</code> is also used for the first interface.

| |

| * <code class="host">node-[X]-b.nancy.grid5000.fr</code>

| |

| * <code class="host">grillon-[X]-b.nancy.grid5000.fr</code>

| |

|

| |

|

| | Infiniband network is physically isolated from Ethernet networks. Therefore, Ethernet network emulated over Infiniband is isolated as well. There isn't any interconnexion, neither at the L2 or L3 layers. |

|

| |

|

== Infiniband 56G on graphite/graoully/grimoire/grisou nodes ==

* <code class="host">graoully-[1-16]</code> have one 56 Gbit/s Infiniband card.
* <code class="host">grimoire-[1-8]</code> have one 56 Gbit/s Infiniband card.
* <code class="host">graphite-[1-4]</code> have one 56 Gbit/s Infiniband card.
* <code class="host">grisou-[50-51]</code> have one 56 Gbit/s Infiniband card.

* Card model: Mellanox Technologies MT27500 Family [ConnectX-3] ([http://www.mellanox.com/related-docs/user_manuals/ConnectX-3_VPI_Single_and_Dual_QSFP_Port_Adapter_Card_User_Manual.pdf ConnectX-3])
* Driver: <code class="dir">mlx4_core</code>
* OAR property: <code>ib_rate='56'</code>
* IP over IB addressing: <code class="host">graoully-[1-16]-ib0</code>.nancy.grid5000.fr (172.18.70.[1-16])
* IP over IB addressing: <code class="host">grimoire-[1-8]-ib0</code>.nancy.grid5000.fr (172.18.71.[1-8])
* IP over IB addressing: <code class="host">graphite-[1-4]-ib0</code>.nancy.grid5000.fr (172.16.68.[9-12])
* IP over IB addressing: <code class="host">grisou-[50-51]-ib0</code>.nancy.grid5000.fr (172.16.72.[50-51])

= Management network =

== Topology ==

The management network is composed of one switch:
* a 50-port HP ProCurve 2650

All compute nodes are connected to the management switch through their ''iLO'' management card. Service nodes are also connected to this switch through their second compute network interface, so the management network can be reached from these service nodes.

The ''iLO'' management cards of the service nodes are connected to the 24-port experiment switch, so these interfaces can be accessed from the [[#Loria interconnect|laboratory network]]. They are not part of the management network.

[[Image:nancy_management_topology.png|center|Nancy's management network topology]]

== Addressing ==

Compute nodes, service nodes and the participating switch each have an IP address on the management network. The numbering is the same as on the experiment network, and the IP addresses again reflect it. The chosen IP network is therefore <code class="host">172.28.152.0/22</code>:

<pre>
Address:   172.28.152.0         10101100.00011100.100110 00.00000000
Netmask:   255.255.252.0 = 22   11111111.11111111.111111 00.00000000
Wildcard:  0.0.3.255            00000000.00000000.000000 11.11111111
=>
Network:   172.28.152.0/22      10101100.00011100.100110 00.00000000
HostMin:   172.28.152.1         10101100.00011100.100110 00.00000001
HostMax:   172.28.155.254       10101100.00011100.100110 11.11111110
Broadcast: 172.28.155.255       10101100.00011100.100110 11.11111111
Hosts/Net: 1022                 Class B, Private Internet
</pre>
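The ipcalc figures for both /22 networks on this page can be cross-checked with Python's standard <code>ipaddress</code> module. The following is a minimal sketch for illustration, not a tool used on the cluster; the helper names <code>dotted_binary</code> and <code>ipcalc_summary</code> are hypothetical. It recomputes the netmask, wildcard, first and last usable host, broadcast address and host count.

```python
import ipaddress


def dotted_binary(address: ipaddress.IPv4Address) -> str:
    """Render an IPv4 address as four dot-separated 8-bit groups."""
    return ".".join(f"{octet:08b}" for octet in address.packed)


def ipcalc_summary(cidr: str) -> dict:
    """Recompute the main ipcalc fields for an IPv4 network given in CIDR form."""
    net = ipaddress.IPv4Network(cidr)
    return {
        "network": str(net),
        "netmask": str(net.netmask),
        "wildcard": str(net.hostmask),
        # The first and last addresses are reserved for network and broadcast.
        "host_min": str(net.network_address + 1),
        "host_max": str(net.broadcast_address - 1),
        "broadcast": str(net.broadcast_address),
        "hosts_per_net": net.num_addresses - 2,
        "private": net.is_private,
        "binary_broadcast": dotted_binary(net.broadcast_address),
    }


if __name__ == "__main__":
    # Experiment network and management network, as documented on this page.
    for cidr in ("172.28.52.0/22", "172.28.152.0/22"):
        for field, value in ipcalc_summary(cidr).items():
            print(f"{cidr:<16} {field:<17} {value}")
```

Unlike ipcalc, <code>dotted_binary</code> does not insert a space at the prefix boundary; the 22 network bits are simply the first 22 digits.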

This network range is divided between equipment in the same way as on the experiment network:
* <code class="host">172.28.152.[1-255]</code> are dedicated to the switches of this network
* <code class="host">172.28.153.[1-255]</code> are dedicated to service nodes
* <code class="host">172.28.154.[1-254]</code> are dedicated to compute nodes
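The split of the management network into one block per kind of equipment can be written as a small lookup table. This is a minimal sketch under two assumptions that this page implies but does not state verbatim: each range is treated as a /24 block, and the equipment number is used directly as the last octet of its address. The names <code>ROLE_BLOCKS</code>, <code>management_address</code> and <code>role_of</code> are hypothetical.

```python
import ipaddress
from typing import Optional

MANAGEMENT_NETWORK = ipaddress.IPv4Network("172.28.152.0/22")

# One /24 per kind of equipment, with the usable numbers listed above.
# Assumption: the equipment number is the last octet of its address.
ROLE_BLOCKS = {
    "switch": (ipaddress.IPv4Network("172.28.152.0/24"), range(1, 256)),
    "service": (ipaddress.IPv4Network("172.28.153.0/24"), range(1, 256)),
    "compute": (ipaddress.IPv4Network("172.28.154.0/24"), range(1, 255)),
}

# Sanity check: every role block lies inside the management /22.
assert all(block.subnet_of(MANAGEMENT_NETWORK) for block, _ in ROLE_BLOCKS.values())


def management_address(role: str, number: int) -> ipaddress.IPv4Address:
    """Return the management address of equipment `number` of the given role."""
    block, valid_numbers = ROLE_BLOCKS[role]
    if number not in valid_numbers:
        raise ValueError(f"{role} number {number} is outside {valid_numbers}")
    return block.network_address + number


def role_of(address: str) -> Optional[str]:
    """Return the role whose block contains `address`, or None if no block does."""
    ip = ipaddress.IPv4Address(address)
    for role, (block, _) in ROLE_BLOCKS.items():
        if ip in block:
            return role
    return None


if __name__ == "__main__":
    print(management_address("compute", 12))  # 172.28.154.12
    print(role_of("172.28.153.3"))            # service
```

Keeping the valid numbers next to each block makes the difference between switch/service ranges ([1-255]) and the compute range ([1-254]) explicit.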

== Naming ==

Compute nodes, service nodes and network switches are named on the management network. Each has only one name, derived from the cluster name in a way that makes it clear the interface is dedicated to management tasks. Names contain the equipment number, which matches the IP address.

* <code class="host">sgrillade[1-255].nancy.grid5000.fr</code> are dedicated to the switches of this network
* <code class="host">fgrillade[1-255].nancy.grid5000.fr</code> are dedicated to service nodes
* <code class="host">grillade-[1-255].nancy.grid5000.fr</code> are dedicated to compute nodes
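Because compute node names on both the experiment and the management networks carry the equipment number, a name can be mapped back to an address. The sketch below assumes that the number X in a name is the last octet of the address: <code>node-X-a</code>/<code>grillon-X-a</code> on <code>172.28.54.X</code>, <code>node-X-b</code>/<code>grillon-X-b</code> on <code>172.28.55.X</code>, and <code>grillade-X</code> on <code>172.28.154.X</code>. The regular expression and the function name <code>address_for</code> are hypothetical, and only compute node names are handled.

```python
import ipaddress
import re
from typing import Optional

# Assumed mapping from (network, interface) to the /24 holding that interface.
_COMPUTE_BLOCKS = {
    ("experiment", "a"): ipaddress.IPv4Network("172.28.54.0/24"),
    ("experiment", "b"): ipaddress.IPv4Network("172.28.55.0/24"),
    ("management", None): ipaddress.IPv4Network("172.28.154.0/24"),
}

# node-X[-a|-b] and grillon-X[-a|-b] are experiment names; grillade-X is the management name.
_NAME_RE = re.compile(
    r"^(?P<prefix>node|grillon|grillade)-(?P<number>\d+)(?:-(?P<iface>[ab]))?"
    r"\.nancy\.grid5000\.fr$"
)


def address_for(hostname: str) -> Optional[ipaddress.IPv4Address]:
    """Map a compute node host name to its address, or return None if it does not match."""
    match = _NAME_RE.match(hostname)
    if match is None:
        return None
    number = int(match["number"])
    # Compute node addresses are documented as [1-254] on both networks.
    if not 1 <= number <= 254:
        return None
    if match["prefix"] == "grillade":
        if match["iface"] is not None:
            return None  # management names have no -a/-b suffix
        block = _COMPUTE_BLOCKS[("management", None)]
    else:
        # A name without a suffix is an alias of the first interface.
        block = _COMPUTE_BLOCKS[("experiment", match["iface"] or "a")]
    return block.network_address + number


if __name__ == "__main__":
    for name in ("node-7-b.nancy.grid5000.fr",
                 "grillon-7.nancy.grid5000.fr",
                 "grillade-7.nancy.grid5000.fr"):
        print(name, address_for(name))
```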

= Grid'5000 interconnect =

== Topology ==

The connection to the other Grid'5000 sites uses the national research network, [http://www.renater.fr Renater]. This network uses 10 Gbit/s Ethernet technology.

[[Image:nancy_g5k_interconnect.png|center|Nancy Grid'5000 interconnect]]

The local Renater site is managed by the [http://www.ciril.fr CIRIL], located 2 km from Loria, which hosts Nancy's Grid'5000 cluster. This distance requires a fiber link using 10GBASE-LR. The 48-port experiment network switch, which is equipped with a 10GbE LR transceiver, handles the Grid'5000 interconnect.

The fiber link to the CIRIL is not direct: it first runs to the Faculté des Sciences, then to Loria, and finally reaches the cluster.

[[Image:nancy_g5k_linking.png|center|Nancy's Grid'5000 linking]]

'''Note''': All the fiber cables used are dedicated to the Grid'5000 interconnect. Where the figure above says ''trunk'', it means a ''more rigid sheath''.

== Addressing ==

Not yet defined.

== Naming ==

There is no specific naming for the Grid'5000 interconnect, because the experiment network names are used across the entire grid.

= Loria interconnect =

== Topology ==

The Loria laboratory has a 1 Gbit/s Ethernet network, to which the service nodes of the cluster are connected. The 24-port experiment switch, which connects the service nodes, therefore provides the uplink to this network.

[[Image:nancy_loria_interconnect.png|center|Nancy's Loria interconnect]]

Nancy's Grid'5000 cluster is hosted in the Loria building, so the cabling distance is short: 1000BASE-T is used over an RJ-45 CAT6 cable. The 24-port experiment switch is directly linked to the Loria router.

[[Image:nancy_loria_linking.png|center|Nancy's Loria linking]]

== Addressing ==

Loria uses a public IP address range for its equipment: <code class="host">152.81.0.0/16</code>. Within this range, the subnet <code class="host">152.81.45.0/24</code> is dedicated to the Grid'5000 cluster:
* <code class="host">152.81.45.[101-1??]</code> are dedicated to the service node first experiment interface
* <code class="host">152.81.44.[201-2??]</code> are dedicated to the service node management interface

{{Note|text=Service node first experiment interfaces have two IP addresses:
* <code>eth0</code> for the cluster's intraconnect: <code>172.28.53.[1-X]</code>
* <code>eth0:0</code> for Loria's interconnect: <code>152.81.45.[101-1XX]</code>}}

== Naming ==

Service node interfaces connected to the Loria network are also named on it. These names are grouped in the <code class="domain">grid5000.loria.fr</code> subdomain:
* <code class="host">fgrillon[1-??].grid5000.loria.fr</code> are dedicated to the service node second experiment interface
:'''Note:''' <code class="host">fgrillon.grid5000.loria.fr</code>, <code class="host">grillon.grid5000.loria.fr</code> and <code class="host">acces.nancy.grid5000.fr</code> are also used for the default service node.
* <code class="host">fgrillade[1-??].grid5000.loria.fr</code> are dedicated to the service node management interface

= Infiniband 56G network =

== Switch ==
* 36-port Mellanox InfiniBand SX6036
* [http://www.mellanox.com/page/products_dyn?product_family=132 Documentation]
* 36 FDR (56 Gb/s) ports in a 1U switch
* 4.032 Tb/s switching capacity
* FDR/FDR10 support for Forward Error Correction (FEC)

== Interconnection ==

The Infiniband network is physically isolated from the Ethernet networks. Therefore, the Ethernet network emulated over Infiniband is isolated as well: there is no interconnection at either layer 2 or layer 3.