Grid5000:Home


Grid'5000 is a large-scale and versatile testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing including Cloud, HPC and Big Data. Key features:

Older documents:


Random pick of publications

Five random publications that benefited from Grid'5000 (at least 2777 overall):

- Ismaël Tankeu, Geoffray Bonnin. Towards Characterising Induced Emotions: Exploiting Physiological Data and Investigating the Effect of Music Familiarity. MuRS 2024: 2nd Music Recommender Systems Workshop, Oct 2024, Bari, Italy. hal-04703972

- Liam Cripwell, Joël Legrand, Claire Gardent. Simplicity Level Estimate (SLE): A Learned Reference-Less Metric for Sentence Simplification. 2023 Conference on Empirical Methods in Natural Language Processing, Dec 2023, Singapore, Singapore. pp.12053-12059, 10.18653/v1/2023.emnlp-main.739. hal-04369824

- Maxime Méloux, Christophe Cerisara. Novel-WD: Exploring acquisition of Novel World Knowledge in LLMs Using Prefix-Tuning. 2023. hal-04269919

- Melvin Chelli, Cédric Prigent, René Schubotz, Alexandru Costan, Gabriel Antoniu, et al. FedGuard: Selective Parameter Aggregation for Poisoning Attack Mitigation in Federated Learning. Cluster 2023 - IEEE International Conference on Cluster Computing, Oct 2023, Santa Fe, New Mexico, United States. pp.1-10, 10.1109/CLUSTER52292.2023.00014. hal-04208787

- Louis-Claude Canon, Anthony Dugois, Loris Marchal, Etienne Rivière. Hector: A Framework to Design and Evaluate Scheduling Strategies in Persistent Key-Value Stores. ICPP 2023 - 52nd International Conference on Parallel Processing, Aug 2023, Salt Lake City, United States. hal-04158577


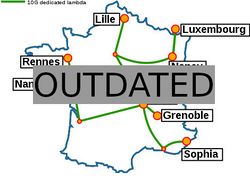

Grid'5000 sites

Current funding

Since June 2008, Inria has been the main contributor to Grid'5000 funding.

- INRIA

- CNRS

- Universities: Université Grenoble Alpes, Grenoble INP

- Regional councils: Aquitaine