Grid5000:Home


Grid'5000 is a large-scale and versatile testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing including Cloud, HPC and Big Data. Key features:

Older documents:


Random pick of publications

Five random publications that benefited from Grid'5000 (at least 2777 overall):

- Diego Amaya-Ramirez. Data science approach for the exploration of HLA antigenicity based on 3D structures and molecular dynamics. Bioinformatics [q-bio.QM]. Université de Lorraine, 2024. English. NNT: 2024LORR0071. tel-04708399

- Guillaume Schreiner, Pierre Neyron. SLICES-FR : l’infrastructure de recherche nationale pour l’expérimentation Cloud et Réseaux du futur. JRES 2024 - Journées réseaux de l'enseignement et de la recherche, Renater, Dec 2024, Rennes, France. pp.1-15. hal-04893845

- Barbara Gendron, Gaël Guibon. SEC: Context-Aware Metric Learning for Efficient Emotion Recognition in Conversation. Proceedings of the 14th Workshop on Computational Approaches to Subjectivity, Sentiment, & Social Media Analysis (WASSA at ACL 2024), Aug 2024, Bangkok, Thailand. hal-04702997

- Gaël Vila, Emmanuel Medernach, Inés Gonzalez, Axel Bonnet, Yohan Chatelain, et al. The Impact of Hardware Variability on Applications Packaged with Docker and Guix: a Case Study in Neuroimaging. ACM REP'24, ACM, Jun 2024, Rennes, France. pp.75-84, 10.1145/3641525.3663626. hal-04480308v2

- Etienne Delort, Laura Riou, Anukriti Srivastava. Environmental Impact of Artificial Intelligence. INRIA; CEA Leti. 2023, pp.1-33. hal-04283245


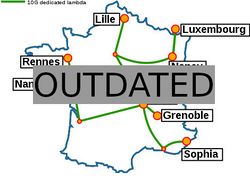

Grid'5000 sites

Current funding

Since June 2008, Inria has been the main contributor to Grid'5000 funding. Current funders:

- Inria
- CNRS
- Universities: Université Grenoble Alpes, Grenoble INP
- Regional councils: Aquitaine