Grid5000:Home


Grid'5000 is a large-scale and versatile testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing including Cloud, HPC and Big Data. Key features:


Five random publications that benefited from Grid'5000 (at least 2779 overall):

- Wedan Emmanuel Gnibga, Andrew A Chien, Anne Blavette, Anne-Cécile Orgerie. FlexCoolDC: Datacenter Cooling Flexibility for Harmonizing Water, Energy, Carbon, and Cost Trade-offs. e-Energy 2024 - 15th ACM International Conference on Future and Sustainable Energy Systems, Jun 2024, Singapore, Singapore. pp.108-122, 10.1145/3632775.3661936. hal-04581701

- Louis Delebecque, Romain Serizel. BinauRec: A dataset to test the influence of the use of room impulse responses on binaural speech enhancement. EUSIPCO23, EURASIP, Sep 2023, Helsinki, Finland. 10.23919/EUSIPCO58844.2023.10289772. hal-04193377

- Thomas Firmin, Pierre Boulet, El-Ghazali Talbi. Parallel Hyperparameter Optimization Of Spiking Neural Networks. 2023. hal-04464394

- Eva Giboulot, Teddy Furon. WaterMax: breaking the LLM watermark detectability-robustness-quality trade-off. NeurIPS 2024 - 38th Conference on Neural Information Processing Systems, Dec 2024, Vancouver, Canada. pp.1-34. hal-04766606

- Can Cui, Imran Ahamad Sheikh, Mostafa Sadeghi, Emmanuel Vincent. End-to-end Multichannel Speaker-Attributed ASR: Speaker Guided Decoder and Input Feature Analysis. 2023 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU 2023), Dec 2023, Taipei, Taiwan. 10.1109/ASRU57964.2023.10389729. hal-04235774


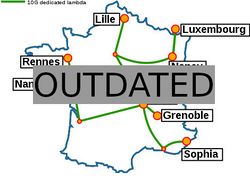

Grid'5000 sites

Current funding

Since June 2008, Inria has been the main contributor to Grid'5000 funding.

- Inria
- CNRS
- Universities: Université Grenoble Alpes, Grenoble INP
- Regional councils: Aquitaine