Grid'5000 is a large-scale and versatile testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing including Cloud, HPC and Big Data.

Key features:

- provides access to a large pool of resources: 1000 nodes and 8000 cores, grouped in homogeneous clusters and featuring various technologies: 10G Ethernet, InfiniBand, GPUs, Xeon Phi

- highly reconfigurable and controllable: researchers can experiment with a fully customized software stack thanks to bare-metal deployment features, and can isolate their experiment at the networking layer

- advanced monitoring and measurement features for collecting traces of network traffic and power consumption, providing a deep understanding of experiments

- designed to support Open Science and reproducible research, with full traceability of infrastructure and software changes on the testbed

- a vibrant community of 500+ users supported by a solid technical team

Read more about our teams, our publications, and the usage policy of the testbed. Then get an account, and learn how to use the testbed with our Getting Started tutorial and the rest of our Users portal.

Grid'5000 is supported by a scientific interest group (GIS) hosted by Inria and including CNRS, RENATER, and several universities, as well as other organizations. Inria has supported Grid'5000 through ADT ALADDIN-G5K (2007-2013), ADT LAPLACE (2014-2016), and IPL HEMERA (2010-2014).

Current status

As of 2025-07-05 23:54: 3 current events, 6 planned.

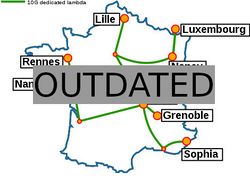

Grid'5000 sites

Current funding

Since June 2008, INRIA has been the main contributor to Grid'5000 funding.

INRIA

CNRS

Universities:

- Université Grenoble Alpes, Grenoble INP

- Université Rennes 1, Rennes

- Institut National Polytechnique de Toulouse / INSA / FERIA / Université Paul Sabatier, Toulouse

- Université Bordeaux 1, Bordeaux

- Université Lille 1, Lille

- École Normale Supérieure, Lyon

Regional councils:

- Aquitaine

- Bretagne

- Champagne-Ardenne

- Provence Alpes Côte d'Azur

- Nord Pas de Calais

- Lorraine