Grid5000:Home: Difference between revisions


===Regional councils===

Aquitaine<br/>

Auvergne-Rhône-Alpes<br/>

Bretagne<br/>

Champagne-Ardenne<br/>

Revision as of 18:54, 13 December 2018


Grid'5000 is a large-scale and versatile testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing including Cloud, HPC and Big Data. Key features:

Older documents:


Random pick of publications

Five random publications that benefited from Grid'5000 (at least 2779 overall):

- Alaaeddine Chaoub. Deep learning representations for prognostics and health management. Computer Science [cs]. Université de Lorraine, 2024. English. NNT : 2024LORR0057. tel-04687618

- Lucas Leandro Nesi. Strategies for Distributing Task-Based Applications on Heterogeneous Platforms. Distributed, Parallel, and Cluster Computing [cs.DC]. Université Grenoble Alpes 2020-..; Universidade Federal do Rio Grande do Sul (Porto Alegre, Brazil), 2023. English. NNT : 2023GRALM048. tel-04468314

- Thomas Tournaire, Hind Castel-Taleb, Emmanuel Hyon. Efficient Computation of Optimal Thresholds in Cloud Auto-scaling Systems. ACM Transactions on Modeling and Performance Evaluation of Computing Systems, 2023, 8 (4), pp.9. 10.1145/3603532. hal-04176076

- Cherif Latreche, Nikos Parlavantzas, Hector A Duran-Limon. FoRLess: A Deep Reinforcement Learning-based approach for FaaS Placement in Fog. UCC 2024 - 17th IEEE/ACM International Conference on Utility and Cloud Computing, Dec 2024, Sharjah, United Arab Emirates. pp.1-9. hal-04791252

- Barbara Gendron, Gaël Guibon. SEC: Context-Aware Metric Learning for Efficient Emotion Recognition in Conversation. Proceedings of the 14th Workshop on Computational Approaches to Subjectivity, Sentiment, & Social Media Analysis (WASSA at ACL 2024), Aug 2024, Bangkok, Thailand. hal-04702997

Latest news


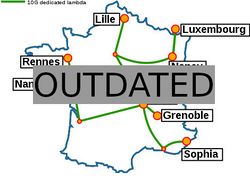

Grid'5000 sites

Current funding

Since June 2008, Inria has been the main contributor to Grid'5000 funding.

INRIA

CNRS

Universities: Université Grenoble Alpes, Grenoble INP

Regional councils: Aquitaine