Grid5000:Home: Difference between revisions


Revision as of 09:23, 18 September 2018

Grid'5000 is a large-scale and versatile testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing including Cloud, HPC and Big Data. Key features:

* provides '''access to a large amount of resources''': 12000 cores, 800 compute-nodes grouped in homogeneous clusters, and featuring various technologies: GPU, SSD, NVMe, 10G Ethernet, Infiniband, Omnipath

* '''highly reconfigurable and controllable''': researchers can experiment with a fully customized software stack thanks to bare-metal deployment features, and can isolate their experiment at the networking layer

* '''advanced monitoring and measurement features for traces collection of networking and power consumption''', providing a deep understanding of experiments

Older documents:


Random pick of publications

Five random publications that benefited from Grid'5000 (at least 2777 overall):

- Adrien Schoen, Gregory Blanc, Pierre-François Gimenez, Yufei Han, Frédéric Majorczyk, et al. A tale of two methods: unveiling the limitations of GAN and the rise of bayesian networks for synthetic network traffic generation. 2024 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Jul 2024, Vienna, Austria. pp.273-286, 10.1109/EuroSPW61312.2024.00036. hal-04871298

- Wèdan Emmanuel Gnibga. Modeling and optimization of Edge infrastructures and their electrical systems. Databases [cs.DB]. Université de Rennes, 2024. English. NNT: 2024URENS069. tel-04967447

- Louis-Claude Canon, Anthony Dugois, Loris Marchal, Etienne Rivière. Hector: A Framework to Design and Evaluate Scheduling Strategies in Persistent Key-Value Stores. ICPP 2023 - 52nd International Conference on Parallel Processing, Aug 2023, Salt Lake City, United States. hal-04158577

- Chih-Kai Huang, Guillaume Pierre. Aggregate Monitoring for Geo-Distributed Kubernetes Cluster Federations. IEEE Transactions on Cloud Computing, 2024, 12 (4), pp.1449-1462. 10.1109/TCC.2024.3482574. hal-04736577

- Hugo Pompougnac, Alban Dutilleul, Christophe Guillon, Nicolas Derumigny, Fabrice Rastello. Performance bottlenecks detection through microarchitectural sensitivity. Institut National de Recherche en Informatique et en Automatique (INRIA). 2024, pp.1-15. hal-04796942


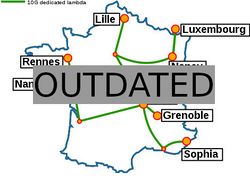

Grid'5000 sites

Current funding

Since June 2008, Inria has been the main contributor to Grid'5000 funding.

- INRIA

- CNRS

- Universities: Université Grenoble Alpes, Grenoble INP

- Regional councils: Aquitaine