Grid5000:Home: Difference between revisions


Ddelabroye: no edit summary


| Line 85: | Line 85: | ||

Champagne-Ardenne<br/> | Champagne-Ardenne<br/> | ||

Provence Alpes Côte d'Azur<br/> | Provence Alpes Côte d'Azur<br/> | ||

Hauts de France<br/> | |||

Lorraine<br/> | Lorraine<br/> | ||

|} | |} | ||

Revision as of 14:26, 2 August 2018


Grid'5000 is a large-scale and versatile testbed for experiment-driven research in all areas of computer science, with a focus on parallel and distributed computing including Cloud, HPC and Big Data. Key features:

Older documents:


Random pick of publications

Five random publications that benefited from Grid'5000 (at least 2777 overall):

- Alexandre Sabbadin, Abdel Kader Chabi Sika Boni, Hassan Hassan, Khalil Drira. Optimizing network slice placement using Deep Reinforcement Learning (DRL) on a real platform operated by Open Source MANO (OSM). Tunisian-Algerian Conference on Applied Computing (TACC 2023), Nov 2023, Sousse, Tunisia. hal-04265140

- Ophélie Renaud, Karol Desnos, Erwan Raffin, Jean-François Nezan. Multicore and Network Topology Codesign for Pareto-Optimal Multinode Architecture. EUSIPCO, EURASIP, Aug 2024, Lyon, France. pp.701-705, 10.23919/EUSIPCO63174.2024.10715023. hal-04608249

- Maxime Agusti, Eddy Caron, Benjamin Fichel, Laurent Lefèvre, Olivier Nicol, et al. PowerHeat: A non-intrusive approach for estimating the power consumption of bare metal water-cooled servers. 2024 IEEE International Conferences on Internet of Things (iThings) and IEEE Green Computing & Communications (GreenCom) and IEEE Cyber, Physical & Social Computing (CPSCom) and IEEE Smart Data (SmartData) and IEEE Congress on Cybermatics, Aug 2024, Copenhagen, Denmark. pp.1-7. hal-04662683

- Jad El Karchi, Hanze Chen, Ali Tehranijamsaz, Ali Jannesari, Mihail Popov, et al. MPI Errors Detection using GNN Embedding and Vector Embedding over LLVM IR. IPDPS 2024 - 38th International Symposium on Parallel and Distributed Processing, May 2024, San Francisco, United States. hal-04724011

- Ali Golmakani, Mostafa Sadeghi, Xavier Alameda-Pineda, Romain Serizel. A weighted-variance variational autoencoder model for speech enhancement. ICASSP 2024 - International Conference on Acoustics Speech and Signal Processing, IEEE, Apr 2024, Seoul, South Korea. pp.1-5, 10.1109/ICASSP48485.2024.10446294. hal-03833827v2

Latest news


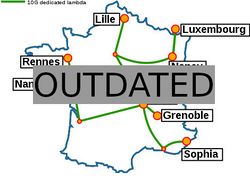

Grid'5000 sites

Current funding

Since June 2008, Inria has been the main contributor to Grid'5000 funding.

- INRIA
- CNRS
- Universities: Université Grenoble Alpes, Grenoble INP
- Regional councils: Aquitaine