Nancy:Network: Difference between revisions

| (105 intermediate revisions by 13 users not shown) | |||

| Line 1: | Line 1: | ||

{{Template:Site link|Network}} | {{Template:Site link|Network}} | ||

{{Portal|Network}} | {{Portal|Network}} | ||

{{ | {{Portal|User}} | ||

'''See also:''' [[Nancy:Hardware|Hardware description for Nancy]] | |||

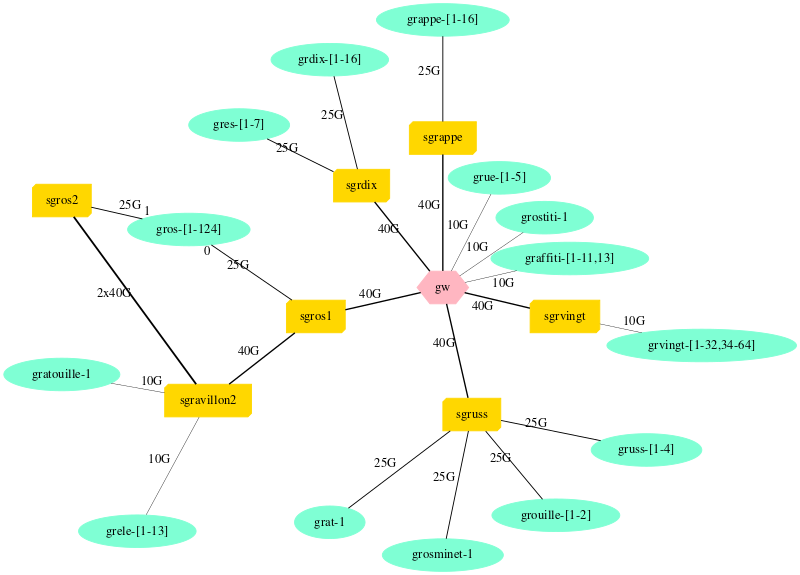

= Overview of Ethernet network topology = | |||

[[File:NancyNetwork.svg|800px]] | |||

{{:Nancy:GeneratedNetwork}} | |||

= | = HPC Networks = | ||

Several HPC Networks are available. | |||

= | == Omni-Path 100G on grele and grimani nodes == | ||

== | *<code class="host">grele-1</code> to <code class="host">grele-14</code> have one 100 Gb/s Omni-Path card. | ||

*<code class="host">grimani-1</code> to <code class="host">grimani-6</code> have one 100 Gb/s Omni-Path card. | |||

* Card Model: Intel Omni-Path Host Fabric Adapter 100 Series, 1 port, PCIe x8 | |||

== | == Omni-Path 100G on grvingt nodes == | ||

The | A second, separate Omni-Path network connects the 64 grvingt nodes and some servers. Its topology is a non-blocking fat tree (1:1). | ||

Topology, generated from <code>opareports -o topology</code>: | |||

[[ | [[File:Topology-grvingt.png|400px]] | ||

More information about using Omni-Path with MPI is available from the [[Run_MPI_On_Grid%275000]] tutorial. | |||

'''NB: OPA (Omni-Path Architecture) is currently not supported in the Debian 12 environment.''' | |||

=== Switch === | |||

* Infiniband Switch 4X DDR | * Infiniband Switch 4X DDR | ||

* Model based on [http://www.mellanox.com/content/pages.php?pg=products_dyn&product_family=16&menu_section=33 Infiniscale_III] | * Model based on [http://www.mellanox.com/content/pages.php?pg=products_dyn&product_family=16&menu_section=33 Infiniscale_III] | ||

* 1 switching card Flextronics F-X43M204 | * 1 switching card Flextronics F-X43M204 | ||

* | * 12 line cards 4X 12 ports DDR Flextronics F-X43M203 | ||

= | === Interconnection === | ||

== | |||

The Omni-Path network is physically isolated from the Ethernet networks. Therefore, the Ethernet network emulated over Infiniband is isolated as well. There is no interconnection at either the L2 or the L3 layer. | |||

Latest revision as of 07:22, 19 June 2024

See also: Hardware description for Nancy

Overview of Ethernet network topology

Network devices models

- gw: Aruba 8325-48Y8C JL635A

- sgrappe: Dell S5224F-ON

- sgravillon2: Cisco Nexus 9508

- sgrdix: Aruba 8325-48Y8C

- sgrdixib: Mellanox QM8700

- sgros1: Dell Z9264F-ON

- sgros2: Dell Z9264F-ON

- sgruss: Dell S5224F-ON

- sgrvingt: Dell S4048

- sw-1: Dell S4128F-ON

More details (including address ranges) are available from the Grid5000:Network page.

HPC Networks

Several HPC Networks are available.

Omni-Path 100G on grele and grimani nodes

- grele-1 to grele-14 have one 100 Gb/s Omni-Path card.

- grimani-1 to grimani-6 have one 100 Gb/s Omni-Path card.

- Card Model: Intel Omni-Path Host Fabric Adapter 100 Series, 1 port, PCIe x8
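
The host ranges listed above can be expanded into explicit node names, for example to build a host list for a job script. The following is a minimal sketch: the helper `expand_hosts` and the constant `OPA_HOSTS` are hypothetical names introduced here for illustration, and only the short host names from this page are produced (no domain suffix is assumed).

```python
from __future__ import annotations


def expand_hosts(cluster: str, first: int, last: int) -> list[str]:
    """Return the short host names cluster-first .. cluster-last (inclusive)."""
    return [f"{cluster}-{i}" for i in range(first, last + 1)]


# Ranges taken from the list above: grele-1..14 and grimani-1..6.
OPA_HOSTS = expand_hosts("grele", 1, 14) + expand_hosts("grimani", 1, 6)

if __name__ == "__main__":
    print(len(OPA_HOSTS), "nodes with an Omni-Path card")
    print(", ".join(OPA_HOSTS))
```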

Omni-Path 100G on grvingt nodes

A second, separate Omni-Path network connects the 64 grvingt nodes and some servers. Its topology is a non-blocking fat tree (1:1).
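
To illustrate what a 1:1 (non-blocking) fat tree implies, the sketch below sizes a generic two-level fat tree in which every leaf switch has as many uplinks as host-facing ports. It is not a description of the actual grvingt fabric: the switch radix of 48 ports is an assumption made for the example, the function name `two_level_fat_tree` is hypothetical, and the "some servers" mentioned above are not counted.

```python
import math


def two_level_fat_tree(hosts: int, radix: int) -> dict:
    """Size a two-level fat tree with a 1:1 down/up port split on each leaf."""
    down = radix // 2                  # leaf ports facing hosts
    up = radix - down                  # leaf ports facing spine switches
    leaves = math.ceil(hosts / down)   # leaf switches needed to attach all hosts
    spines = math.ceil(leaves * up / radix)  # spines needed to terminate all uplinks
    return {
        "leaves": leaves,
        "spines": spines,
        "oversubscription": down / up,  # 1.0 means non-blocking (1:1)
    }


if __name__ == "__main__":
    # 64 hosts comes from the text above; radix=48 is an assumed value.
    print(two_level_fat_tree(hosts=64, radix=48))
```

An oversubscription of 1.0 means the uplink capacity of each leaf matches the capacity offered to its hosts, which is what "non-blocking" refers to here.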

Topology, generated from opareports -o topology:

More information about using Omni-Path with MPI is available from the Run_MPI_On_Grid'5000 tutorial.

NB: OPA (Omni-Path Architecture) is currently not supported in the Debian 12 environment.

Switch

- Infiniband Switch 4X DDR

- Model based on Infiniscale_III

- 1 switching card Flextronics F-X43M204

- 12 line cards 4X 12 ports DDR Flextronics F-X43M203
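
The figures in the list above translate into a port count and per-port bandwidth as in the following worked example. It relies on the standard InfiniBand DDR parameters (5 Gb/s signalling per lane, 8b/10b encoding); the variable and function names are illustrative only, and the aggregate figure is simple arithmetic, not a measured or vendor-stated value.

```python
LINE_CARDS = 12          # from the list above
PORTS_PER_CARD = 12      # from the list above
LANES_PER_PORT = 4       # "4X"
DDR_LANE_GBPS = 5        # InfiniBand DDR signalling rate per lane
ENCODING = (8, 10)       # 8b/10b: 8 data bits carried per 10 signalled bits


def ddr_switch_summary() -> dict:
    """Compute port count and per-port rates for a 4X DDR chassis switch."""
    ports = LINE_CARDS * PORTS_PER_CARD
    raw = LANES_PER_PORT * DDR_LANE_GBPS          # signalling rate per port, Gb/s
    data = raw * ENCODING[0] // ENCODING[1]       # usable data rate per port, Gb/s
    return {
        "ports": ports,
        "raw_gbps_per_port": raw,
        "data_gbps_per_port": data,
        "aggregate_data_gbps": ports * data,      # per direction, all ports
    }


if __name__ == "__main__":
    print(ddr_switch_summary())
```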

Interconnection

The Omni-Path network is physically isolated from the Ethernet networks. Therefore, the Ethernet network emulated over Infiniband is isolated as well. There is no interconnection at either the L2 or the L3 layer.