Strasbourg Core-Network XP Infra

(→Todo) |

No edit summary |

||

| Line 2: | Line 2: | ||

== Core network topology emulation == | == Core network topology emulation == | ||

Let users design topologies of | Goal: Let users design topologies of interconnection between core network experimentation switches and other server nodes. | ||

Below on the left, is an example of emulated network topology. On the right, is the associated real network topology. | Thanks to an infrastructure ("mesh") switch, on-demand network links can be created between experimentation switches and server nodes. | ||

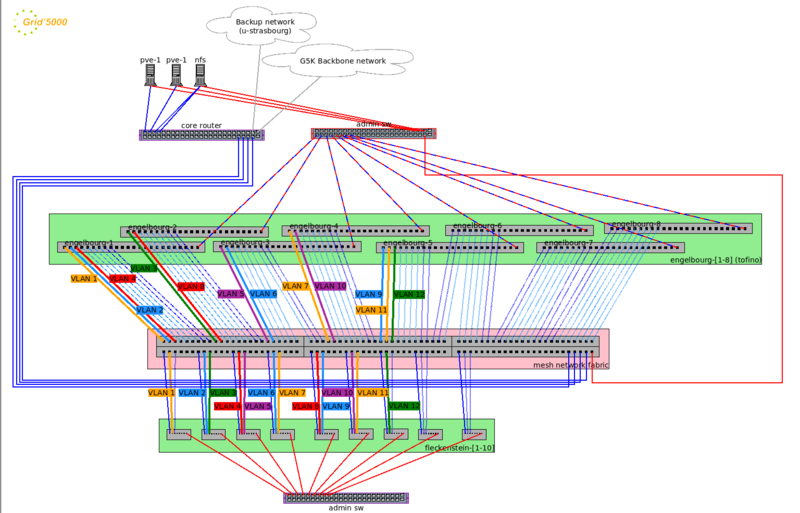

Below, on the left, is an example of emulated network topology. On the right, is the associated real network topology. | |||

[[File:G5k-strasbourg-network-ex3-2.png|400px|alt=example of emulated network topology]] | [[File:G5k-strasbourg-network-ex3-2.png|400px|alt=example of emulated network topology]] | ||

[[File:G5k-strasbourg-network-ex3-1.png|800px|real network topology]] | [[File:G5k-strasbourg-network-ex3-1.png|800px|real network topology]] | ||

Users can fully reinstall/reconfigure all equipment in the green boxes (fleckenstein server nodes and engelbourg network switch nodes). | Users can fully reinstall/reconfigure all experimental pieces of equipment in the green boxes (fleckenstein server nodes and engelbourg network switch nodes). | ||

Users can configure the VLAN topology of the mesh network switch (rose box) thanks to kavlan. | Users can configure the VLAN topology of the mesh network switch (rose box) thanks to kavlan. | ||

| Line 16: | Line 18: | ||

Experiments may involve edge devices such as the [[jetson|Jetsons nodes of the estats cluster]] and other nodes. For instance, to study the edge-to-cloud continuum, e.g.: federated learning. Interconnection between nodes may be emulated, adding network latency/failures between the network/edge equipments. | Experiments may involve edge devices such as the [[jetson|Jetsons nodes of the estats cluster]] and other nodes. For instance, to study the edge-to-cloud continuum, e.g.: federated learning. Interconnection between nodes may be emulated, adding network latency/failures between the network/edge equipments. | ||

This can be achieved using kavlan to interleave nodes in charge of adding latency/failures | This can be achieved using kavlan to interleave nodes in charge of adding latency/failures between edge devices (which in reality are in the same 1 rack U chassis in Toulouse). A tool such as [https://distem.gitlabpages.inria.fr/index.html distem] may be used to emulate latency/failures. | ||

== Instrumentation of the NOS == | == Instrumentation of the NOS == | ||

Allowing to change the OS of network equipment is a must-have feature for network experimenters. | |||

Thus the goal is to let users master the Network Operating System of switches, just like already possible on experimentation servers (with kadeploy). | Thus the goal is to let users master the Network Operating System of switches, just like already possible on experimentation servers (with kadeploy). | ||

Revision as of 17:55, 12 February 2025

This document summarizes the work in progress to allow network experimentation: from core networks to edge devices.

Core network topology emulation

Goal: let users design interconnection topologies between core-network experimentation switches and other server nodes.

Thanks to an infrastructure ("mesh") switch, on-demand network links can be created between experimentation switches and server nodes.

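Below, on the left, is an example of an emulated network topology; on the right is the associated real network topology.

[Figures: example of emulated network topology (G5k-strasbourg-network-ex3-2.png); real network topology (G5k-strasbourg-network-ex3-1.png)]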

Users can fully reinstall/reconfigure all experimental pieces of equipment in the green boxes (fleckenstein server nodes and engelbourg network switch nodes).

Users can configure the VLAN topology of the mesh network switch (rose box) thanks to kavlan.
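One way to picture how the mesh switch could realize an emulated topology is to treat each emulated point-to-point link as a VLAN shared by the two mesh-switch ports of its endpoints. The Python sketch below only illustrates that mapping under this assumption; it does not call kavlan, and the node names, the starting VLAN ID and the `assign_vlans` helper are all hypothetical.

```python
from itertools import count


def assign_vlans(links, first_vlan=100):
    """Give each emulated link its own VLAN ID.

    Returns two dicts: link -> VLAN ID, and node -> list of VLAN IDs
    the node must be attached to on the mesh switch.
    All names and the VLAN range are illustrative assumptions.
    """
    vlan_ids = count(first_vlan)
    link_vlan = {}
    node_vlans = {}
    for a, b in links:
        key = tuple(sorted((a, b)))  # (a, b) and (b, a) are the same link
        if key in link_vlan:
            continue  # ignore a link listed twice
        vlan = next(vlan_ids)
        link_vlan[key] = vlan
        for node in key:
            node_vlans.setdefault(node, []).append(vlan)
    return link_vlan, node_vlans


# Hypothetical emulated topology: two switches linked together,
# each with one server attached.
links = [
    ("engelbourg-1", "engelbourg-2"),
    ("engelbourg-1", "fleckenstein-1"),
    ("engelbourg-2", "fleckenstein-2"),
]
link_vlan, node_vlans = assign_vlans(links)
for (a, b), vlan in link_vlan.items():
    print(f"VLAN {vlan}: {a} <-> {b}")
```

In this model, a node that appears in several links needs one mesh-facing port per VLAN (or a tagged trunk port); which of the two applies on the real platform is not stated here.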

Edge to cloud network emulation

Experiments may involve edge devices, such as the Jetson nodes of the estats cluster, together with other nodes, for instance to study the edge-to-cloud continuum (e.g., federated learning). The interconnection between nodes may be emulated by adding network latency or failures between the network and edge equipment.

This can be achieved by using kavlan to interleave nodes in charge of adding latency or failures between edge devices (which in reality sit in the same 1U rack chassis in Toulouse). A tool such as distem (https://distem.gitlabpages.inria.fr/index.html) may be used to emulate latency and failures.
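As an illustration of what an interleaved node could do, the sketch below builds Linux `tc` netem commands that add delay and packet loss on the interfaces of a node placed between two edge devices. It assumes that the interleaved node forwards traffic between two interfaces, hypothetically named `eth0` and `eth1`. It uses plain `tc` rather than distem, and the `netem_commands` helper is made up for this example.

```python
def netem_commands(interfaces, delay_ms, loss_pct=0.0):
    """Build one `tc ... netem` command per egress interface.

    The commands are only returned as strings; running them requires
    root privileges on the interleaved node. Interface names are
    assumptions, not taken from the platform.
    """
    commands = []
    for dev in interfaces:
        cmd = f"tc qdisc replace dev {dev} root netem delay {delay_ms}ms"
        if loss_pct > 0:
            cmd += f" loss {loss_pct}%"
        commands.append(cmd)
    return commands


# 25 ms added on each egress interface, i.e. about 50 ms of extra
# round-trip time for traffic crossing the node, plus 1% loss per direction.
for cmd in netem_commands(["eth0", "eth1"], delay_ms=25, loss_pct=1.0):
    print(cmd)
```

Because netem acts on packets leaving an interface, shaping both interfaces of the forwarding node affects both directions of the emulated link.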

Instrumentation of the NOS

The ability to change the OS of network equipment is a must-have feature for network experimenters.

Thus the goal is to let users master the Network Operating System (NOS) of switches, just as is already possible on experimentation servers (with kadeploy).

- Nowadays, white-box switches run Linux-based operating systems (e.g., OpenSwitch, SONiC, Cumulus), which are derivatives of classical GNU/Linux server distributions (e.g., Debian).

- From the hardware viewpoint, they are very server-like:

  - x86 CPU

  - Hard drive (SSD)

  - A management NIC that is just a classical CPU-attached NIC

  - A BMC (out-of-band power on/off, serial console, monitoring, ...)

- The only special feature is that they include an ASIC for offloading network functions (switching, possibly routing, ...).

- That ASIC requires a dedicated Linux driver to enable the offloading functions.

- That ASIC can ultimately be regarded as an accelerator, just like the GPUs or FPGAs installed in servers. Thus it can be managed like other accelerators, with no impact on the bare-metal OS deployment.

The conclusion is that white-box switches can be managed like servers from the OS and hardware perspectives (apart from the network cabling, of course, which is discussed above).

This means that ONIE, the standard mechanism for installing a NOS, can be replaced by another, more classical server installation mechanism (typically PXE-based, such as kadeploy). The price is either converting ONIE NOS images to a format compatible with the server installer (e.g., kadeploy environments) or adding ONIE NOS image support to the server installer (e.g., the kadeploy installer miniOS).

In conclusion, the P4 switches installed in Strasbourg could be managed just like any other SLICES-FR cluster, benefiting from all the powerful features provided by the SLICES-FR stack (bare-metal deployment with kadeploy, recipe-based OS image building with kameleon, hardware reference repository, hardware sanity certification with g5k-check, system regression tests, ...).

Todo

- Additional higher-level support for experimenting with P4 programming?