Ceph


Latest revision as of 17:55, 2 December 2024

Warning: Ceph is not maintained anymore.

Ceph is a distributed object store and file system designed to provide excellent performance, reliability, and scalability. See more at http://ceph.com/

This page gives information about the managed Ceph service provided by Grid'5000.

About Ceph

The following documentation describes the Ceph architecture: https://docs.ceph.com/en/latest/architecture/

Grid'5000 Deployment

| Site | Size | Configuration | Rados | RBD | CephFS | RadosGW |
|---|---|---|---|---|---|---|
| Rennes | ~9 TB | 16 OSDs on 4 nodes | | | | |
| Nantes | ~7 TB | 12 OSDs on 4 nodes | | | | |

Configuration

Generate your key

To access the object store, you need a CephX key. Generate it from the Ceph web interface: https://api.grid5000.fr/sid/sites/rennes/storage/ceph/ui/

Click on "Generate a Cephx Key". A new key is generated.

Your key is now available from the frontends:

[client.jdoe]
    key = AQBwknVUwAPAIRAACddyuVTuP37M55s2aVtPrg==

Note: Replace jdoe with your login, and rennes with nantes if your experiment runs at Nantes.

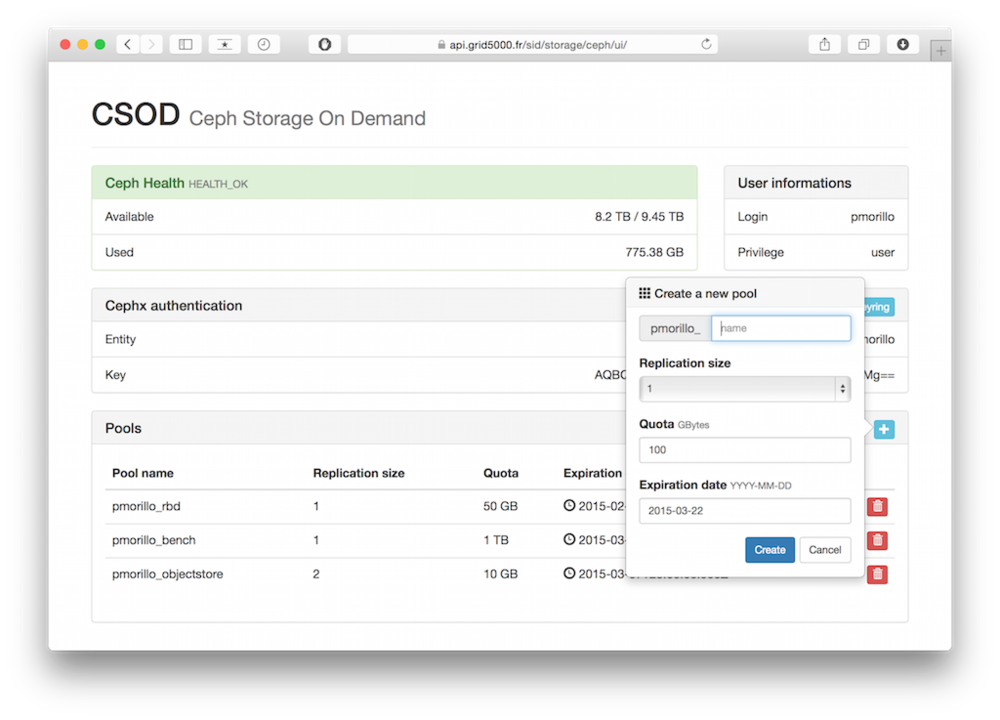

Create/Update/Delete Ceph pools

Requirement: Generate your key

Manage your Ceph pools from the Grid'5000 Ceph web interface:

- https://api.grid5000.fr/sid/sites/rennes/storage/ceph/ui/

- https://api.grid5000.fr/sid/sites/nantes/storage/ceph/ui/

Currently, each user is limited to 4 pools.

Replication size

- 1: No replication (not safe, most efficient for write operations; not recommended: one disk crash and all data is lost)

- n: One primary object + n-1 replicas (safer, less efficient for write operations)

Note: See https://ceph.io/wp-content/uploads/2016/08/weil-thesis.pdf (page 130)

You can edit the replication size by clicking on the replication size value. If the new value is greater than the old one, objects are automatically replicated. Depending on the number of objects, this operation puts load on the Ceph cluster and may take a long time.
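With a replication size of n, each object is written to n OSDs, so a pool consumes roughly n times its data in raw capacity; this is also why writes become less efficient as n grows. A minimal sketch of that arithmetic, assuming integer gigabytes and ignoring metadata overhead (raw_needed_gb and usable_gb are hypothetical helper names):

```bash
# Capacity arithmetic for a replicated pool (sketch: integer GB, no metadata overhead).

# raw_needed_gb DATA_GB REPLICAS: raw GB consumed on the OSDs by DATA_GB of objects
raw_needed_gb() {
  echo $(( $1 * $2 ))
}

# usable_gb RAW_GB REPLICAS: logical GB that fit in RAW_GB of raw space (rounded down)
usable_gb() {
  echo $(( $1 / $2 ))
}
```

For example, raw_needed_gb 100 3 prints 300, and usable_gb 9000 3 prints 3000: the ~9 TB of raw space at Rennes holds roughly 3 TB of data at replication size 3.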

Quota

Quotas are currently limited to 2 TB per pool. You can change a pool's quota at any time by clicking on the quota value, so start with a small, realistic quota.

Expiration date

The default expiration date is limited to 2 months. You can extend the expiration date at any time by clicking on the expiration date value.

Configure Ceph on clients

On the frontend or on a node with the standard environment

Create a Ceph configuration file ~/.ceph/config:

- For Nantes:

[global]
mon host = ceph0,ceph1,ceph2
keyring = /home/<login>/.ceph/ceph.client.<login>.keyring

- For Rennes:

[global]
mon host = ceph0,ceph1,ceph2
keyring = /home/<login>/.ceph/ceph.client.<login>.keyring
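Both sites use the same [global] section, so the file can also be generated from a setup script. A minimal sketch using a heredoc; write_ceph_config is a hypothetical helper, and the keyring path follows the /home/<login> layout shown above:

```bash
# write_ceph_config DIR LOGIN: create DIR/config with the [global] section above.
write_ceph_config() {
  local dir="$1" login="$2"
  mkdir -p "$dir" || return 1
  # The heredoc body starts at column 0 so the config lines carry no indentation.
  cat > "$dir/config" <<EOF
[global]
mon host = ceph0,ceph1,ceph2
keyring = /home/${login}/.ceph/ceph.client.${login}.keyring
EOF
}
```

Usage: write_ceph_config ~/.ceph "$USER"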

Create a Ceph keyring file ~/.ceph/ceph.client.$USER.keyring containing your key:

frontend $ curl -k https://api.grid5000.fr/sid/sites/rennes/storage/ceph/auths/$USER.keyring > ~/.ceph/ceph.client.$USER.keyring

Note: Replace rennes with nantes if you are at Nantes.
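To avoid editing the URL by hand, the site can be passed as a parameter. A sketch, assuming the keyring URL pattern of the curl command above holds for both rennes and nantes; ceph_keyring_url and fetch_ceph_keyring are hypothetical helpers, and curl -f plus chmod 600 are additions not present in the original command:

```bash
# ceph_keyring_url SITE LOGIN: print the keyring URL used by the curl command above.
ceph_keyring_url() {
  printf 'https://api.grid5000.fr/sid/sites/%s/storage/ceph/auths/%s.keyring\n' "$1" "$2"
}

# fetch_ceph_keyring SITE LOGIN DIR: save the keyring as DIR/ceph.client.LOGIN.keyring.
fetch_ceph_keyring() {
  local site="$1" login="$2" dir="$3"
  local dest="$dir/ceph.client.$login.keyring"
  case "$site" in
    rennes|nantes) ;;  # the two sites listed in the deployment table
    *) echo "unsupported site: $site" >&2; return 1 ;;
  esac
  mkdir -p "$dir" || return 1
  # -f: fail on HTTP errors instead of saving an error page as the keyring
  # -s: silent; -k: skip TLS verification, as in the command above
  curl -fsk -o "$dest" "$(ceph_keyring_url "$site" "$login")" || return 1
  chmod 600 "$dest"  # the keyring holds your secret key
}
```

Usage: fetch_ceph_keyring nantes "$USER" ~/.ceph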

Then set the following environment variables:

export CEPH_CONF=~/.ceph/config
export CEPH_ARGS="--id $USER"

Note: It is a good idea to export CEPH_CONF and CEPH_ARGS in your ~/.bashrc or ~/.zshrc file.
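To make this persistent without duplicating lines each time a setup script runs, the exports can be appended only when they are missing. A sketch; add_ceph_env is a hypothetical helper, and the single quotes keep ~ and $USER unexpanded so they are resolved when the rc file is sourced:

```bash
# add_ceph_env RCFILE: append the two exports to RCFILE unless already present.
add_ceph_env() {
  local rc="$1" line
  for line in 'export CEPH_CONF=~/.ceph/config' 'export CEPH_ARGS="--id $USER"'; do
    # -x: whole-line match, -F: fixed string (no regex), -q: quiet
    grep -qxF -- "$line" "$rc" 2>/dev/null || printf '%s\n' "$line" >> "$rc"
  done
}
```

Usage: add_ceph_env ~/.bashrc (running it twice leaves a single copy of each line).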

Test

$ ceph health
HEALTH_OK
$ ceph osd tree
# id  weight  type name               up/down  reweight
-1    8.608   root default
-6    8.608       datacenter rennes
-2    2.152           host ceph0
0     0.538               osd.0       up       1
1     0.538               osd.1       up       1
2     0.538               osd.2       up       1
3     0.538               osd.3       up       1
-3    2.152           host ceph1
4     0.538               osd.4       up       1
5     0.538               osd.5       up       1
6     0.538               osd.6       up       1
7     0.538               osd.7       up       1
-4    2.152           host ceph2
8     0.538               osd.8       up       1
9     0.538               osd.9       up       1
10    0.538               osd.10      up       1
11    0.538               osd.11      up       1
-5    2.152           host ceph3
12    0.538               osd.12      up       1
14    0.538               osd.14      up       1
15    0.538               osd.15      up       1
13    0.538               osd.13      up       1
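To check from a script that all OSDs are up, the text output of ceph osd tree can be filtered. A sketch assuming the column layout shown above (id, weight, name, up/down, reweight); newer Ceph releases print additional columns, so adjust the field numbers or use a machine-readable --format if your client differs. count_up_osds is a hypothetical helper:

```bash
# count_up_osds: read `ceph osd tree` output on stdin, print how many OSDs are "up".
# Field 3 is the name (osd.N) and field 4 the up/down status in the layout above.
count_up_osds() {
  awk '$3 ~ /^osd\./ && $4 == "up" { n++ } END { print n + 0 }'
}
```

Usage: ceph osd tree | count_up_osds prints 16 for the Rennes tree above.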

Usage

Rados Object Store access

Requirements: Create a Ceph pool • Configure Ceph on clients

From command line

Put an object into a pool

List objects of a pool

Get object from a pool

Remove an object
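The four operations above correspond to rados subcommands (put, ls, get, rm). A sketch chaining them into a round-trip check, assuming CEPH_CONF and CEPH_ARGS are exported as described in the client configuration and that the pool is one of yours; rados_roundtrip is a hypothetical helper:

```bash
# rados_roundtrip POOL OBJECT FILE: upload FILE, list it, download it,
# compare the contents, then remove the object. Returns 0 on success.
rados_roundtrip() {
  local pool="$1" obj="$2" file="$3" out rc=1
  out=$(mktemp) || return 1
  if rados -p "$pool" put "$obj" "$file" &&        # upload FILE as object OBJ
     rados -p "$pool" ls | grep -qxF -- "$obj" &&  # the object is listed
     rados -p "$pool" get "$obj" "$out" &&         # download it again
     cmp -s "$file" "$out"; then                   # contents are identical
    rc=0
  fi
  rm -f "$out"
  rados -p "$pool" rm "$obj" 2>/dev/null           # remove the test object
  return "$rc"
}
```

Usage: rados_roundtrip jdoe_objects test-object ./myfile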

Usage information

pool name     category  KB       objects  clones  degraded  unfound  rd  rd KB  wr   wr KB
jdoe_objects  -         1563027  2        0       0         0        0   0      628  2558455
  total used     960300628       295991
  total avail   7800655596
  total space   9229804032

From your application (C/C++, Python, Java, Ruby, PHP...)

See: https://docs.ceph.com/en/latest/rados/api/librados-intro/?highlight=librados

Rados benchmarks

On a 1G ethernet client

frontend $ oarsub -I -p parapluie

Write (16 concurrent operations)

sec Cur ops started finished avg MB/s cur MB/s last lat avg lat

0 0 0 0 0 0 - 0

1 16 39 23 91.9669 92 0.91312 0.429879

2 16 70 54 107.973 124 0.679348 0.458774

3 16 96 80 106.644 104 0.117995 0.48914

4 16 126 110 109.98 120 0.207923 0.520003

5 16 154 138 110.38 112 0.370721 0.521431

6 16 180 164 109.314 104 0.293496 0.533345

...

Total time run: 60.321552

Total reads made: 1691

Read size: 4194304

Bandwidth (MB/sec): 112.132

Average Latency: 0.569955

Max latency: 4.06499

Min latency: 0.055024

Read

Maintaining 16 concurrent writes of 4194304 bytes for at least 60 seconds.

Object prefix: benchmark_data_parapluie-31.rennes.grid5000._25776

sec Cur ops started finished avg MB/s cur MB/s last lat avg lat

0 0 0 0 0 0 - 0

1 16 39 23 91.98 92 0.553582 0.434956

2 16 66 50 99.9807 108 0.471024 0.486303

3 16 95 79 105.314 116 0.654954 0.510875

4 16 124 108 107.981 116 0.522723 0.535851

5 16 151 135 107.981 108 0.244908 0.547624

6 16 180 164 109.314 116 0.239384 0.54942

...

Total time run: 60.478634

Total writes made: 1694

Write size: 4194304

Bandwidth (MB/sec): 112.040

Stddev Bandwidth: 15.9269

Max bandwidth (MB/sec): 124

Min bandwidth (MB/sec): 0

Average Latency: 0.571046

Stddev Latency: 0.354297

Max latency: 2.62603

Min latency: 0.165878

On a 10G ethernet client

frontend $ oarsub -I -p paranoia

Write (16 concurrent operations)

- Pool replication size: 1

Maintaining 16 concurrent writes of 4194304 bytes for at least 60 seconds.

Object prefix: benchmark_data_paranoia-3.rennes.grid5000.fr_5626

sec Cur ops started finished avg MB/s cur MB/s last lat avg lat

0 0 0 0 0 0 - 0

1 16 146 130 519.915 520 0.488794 0.103537

2 16 247 231 461.933 404 0.164705 0.126076

3 16 330 314 418.612 332 0.036563 0.148763

4 16 460 444 443.941 520 0.177378 0.141696

...

Total time run: 60.579488

Total writes made: 5965

Write size: 4194304

Bandwidth (MB/sec): 393.863

Stddev Bandwidth: 83.789

Max bandwidth (MB/sec): 520

Min bandwidth (MB/sec): 0

Average Latency: 0.162479

Stddev Latency: 0.195071

Max latency: 1.7959

Min latency: 0.033313

Read

sec Cur ops started finished avg MB/s cur MB/s last lat avg lat

0 0 0 0 0 0 - 0

1 16 288 272 1087.78 1088 0.028679 0.0556937

2 16 569 553 1105.82 1124 0.049299 0.0569641

3 16 850 834 1111.85 1124 0.024369 0.0566906

4 16 1127 1111 1110.86 1108 0.024855 0.0570628

5 16 1406 1390 1111.86 1116 0.182567 0.0568681

...

Total time run: 21.377795

Total reads made: 5965

Read size: 4194304

Bandwidth (MB/sec): 1116.111

Average Latency: 0.0573023

Max latency: 0.270447

Min latency: 0.011937
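Results like these come from rados bench. A sketch of how comparable runs can be launched (60 seconds, 16 concurrent operations, as in the results above), plus a filter that extracts the bandwidth line for comparing clients. run_rados_bench and bench_bandwidth are hypothetical helpers, and POOL should be one of your own pools, since the benchmark writes real objects:

```bash
# run_rados_bench POOL: 60 s write test, then 60 s sequential read test,
# 16 concurrent operations each. --no-cleanup keeps the written objects
# so that the "seq" read test has data to read.
run_rados_bench() {
  local pool="$1"
  rados -p "$pool" bench 60 write -t 16 --no-cleanup &&
    rados -p "$pool" bench 60 seq -t 16 &&
    rados -p "$pool" cleanup   # delete the benchmark objects afterwards
}

# bench_bandwidth: print the "Bandwidth (MB/sec)" value(s) from rados bench output on stdin.
bench_bandwidth() {
  awk -F': *' '/^Bandwidth \(MB\/sec\)/ { print $2 }'
}
```

Usage: run_rados_bench jdoe_objects 2>&1 | bench_bandwidth prints one bandwidth figure per phase (write, then read).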

RBD (Rados Block Device)

Requirements: Create a Ceph pool • Configure Ceph on clients

Create a Rados Block Device

frontend $ rbd --pool jdoe_rbd create <rbd_name> --size <MB>

Warning: The Ceph server release is older than the frontend's. For the moment, we have to force "--image-format=1", which produces the warning "rbd: image format 1 is deprecated".

Example:

$ rbd -p pmorillo_rbd create datas --size 4096
$ rbd -p pmorillo_rbd ls
datas
$ rbd -p pmorillo_rbd info datas
rbd image 'datas':
    size 4096 MB in 1024 objects
    order 22 (4096 kB objects)
    block_name_prefix: rb.0.604e.238e1f29
    format: 1

Note: rbd -p pmorillo_rbd info datas is equivalent to rbd info pmorillo_rbd/datas.
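The object count reported by rbd info follows from the image order: each object holds 2^order bytes, so order 22 means 4 MiB objects and a 4096 MB image spans 4096 / 4 = 1024 objects. Because RBD images are thin-provisioned, objects are only created when written, so this count is an upper bound. A shell check of the arithmetic (rbd_object_count is a hypothetical helper, taking sizes in MB as passed to --size):

```bash
# rbd_object_count SIZE_MB ORDER: number of objects of 2^ORDER bytes needed
# for an image of SIZE_MB megabytes (rounded up for a partial last object).
rbd_object_count() {
  local size_bytes=$(( $1 * 1024 * 1024 )) obj_bytes=$(( 1 << $2 ))
  echo $(( (size_bytes + obj_bytes - 1) / obj_bytes ))
}
```

Usage: rbd_object_count 4096 22 prints 1024, matching the info output above.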

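Create a filesystem and mount the RBD

frontend $ oarsub -I -t deploy

frontend $ kadeploy3 -e debian11-x64-base -f $OAR_NODEFILE -k

frontend $ ssh root@deployed_node

node $ apt install ceph

node $ modprobe rbd

node $ modinfo rbd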

Create the Ceph configuration file /etc/ceph/ceph.conf:

[global]
mon host = ceph0,ceph1,ceph2

Get the CephX key from the frontend:

frennes $ curl -k https://api.grid5000.fr/sid/sites/rennes/storage/ceph/auths/$USER.keyring | ssh root@deployed_node "cat - > /etc/ceph/ceph.client.$USER.keyring"

Map the RBD image on the node. Example:

rbd --id pmorillo map pmorillo_rbd/datas

List the mapped images. Example:

rbd --id pmorillo showmapped
id  pool          image  snap  device
0   pmorillo_rbd  datas  -     /dev/rbd0

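Once a filesystem is created on /dev/rbd0 and mounted on /mnt/rbd, the device appears in the df output:

Filesystem      Size  Used  Avail  Use%  Mounted on
/dev/sda3        15G  1.6G    13G   11%  /
...
/dev/sda5       525G   70M   498G    1%  /tmp
/dev/rbd0       3.9G  8.0M   3.6G    1%  /mnt/rbd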
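A minimal sketch of creating the filesystem and mounting the mapped device, run as root on the deployed node. The ext4 filesystem is an assumption (any filesystem works), /dev/rbd0 and /mnt/rbd match the outputs above, and format_and_mount is a hypothetical helper. mkfs erases whatever the image already contains, so run it only on a fresh image:

```bash
# format_and_mount DEVICE MOUNTPOINT: create an ext4 filesystem on DEVICE
# (destroying its contents) and mount it on MOUNTPOINT.
format_and_mount() {
  local dev="$1" mnt="$2"
  case "$dev" in
    /dev/*) ;;  # only accept device paths
    *) echo "not a device path: $dev" >&2; return 1 ;;
  esac
  mkfs.ext4 -q "$dev" &&
    mkdir -p "$mnt" &&
    mount "$dev" "$mnt"
}
```

Usage: format_and_mount /dev/rbd0 /mnt/rbd && df -h /mnt/rbd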

Note: To map an RBD image at boot time, use the /etc/ceph/rbdmap file:

# RbdDevice             Parameters
#poolname/imagename     id=client,keyring=/etc/ceph/ceph.client.keyring
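Each line of /etc/ceph/rbdmap names an image and the options used to map it. A sketch that builds such a line for your own login; rbdmap_entry is a hypothetical helper, and the keyring path assumes the file copied to /etc/ceph earlier in this section:

```bash
# rbdmap_entry POOL IMAGE LOGIN: print an rbdmap line in the format shown above,
# separated by a tab: "POOL/IMAGE<TAB>id=LOGIN,keyring=/etc/ceph/ceph.client.LOGIN.keyring"
rbdmap_entry() {
  local pool="$1" image="$2" login="$3"
  printf '%s/%s\tid=%s,keyring=/etc/ceph/ceph.client.%s.keyring\n' \
    "$pool" "$image" "$login" "$login"
}
```

Usage: rbdmap_entry pmorillo_rbd datas pmorillo >> /etc/ceph/rbdmap. The file is read at boot by the rbdmap service shipped with the Ceph packages; on a systemd-based image it presumably needs to be enabled (systemctl enable rbdmap), which is worth checking on your environment.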

Resize, snapshots, copy, etc.

See:

- http://ceph.com/docs/master/rbd/rados-rbd-cmds/

- http://ceph.com/docs/master/man/8/rbd/#examples

- http://ceph.com/docs/master/rbd/rbd-snapshot/

- rbd -h

QEMU/RBD

Requirements: Create a Ceph pool • Configure Ceph on clients

Convert a qcow2 file into RBD

frennes $ oarsub -I

node $ qemu-img convert -f qcow2 -O raw /grid5000/virt-images/debian11-x64-base.qcow2 rbd:pool_name/debian11:id=jdoe

node $ rbd --id jdoe --pool pool_name ls

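debian11

Start KVM virtual machine from a Rados Block Device

node $ screen kvm -m 1024 -drive format=raw,file=rbd:pool_name/debian11:id=jdoe -nographic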