Strasbourg Core-Network XP Infra


{{Portal|User}}

This page describes the technical team's projects on the Grid'5000 site of Strasbourg, which aim to enable core-network experimentation.

This is a work in progress.

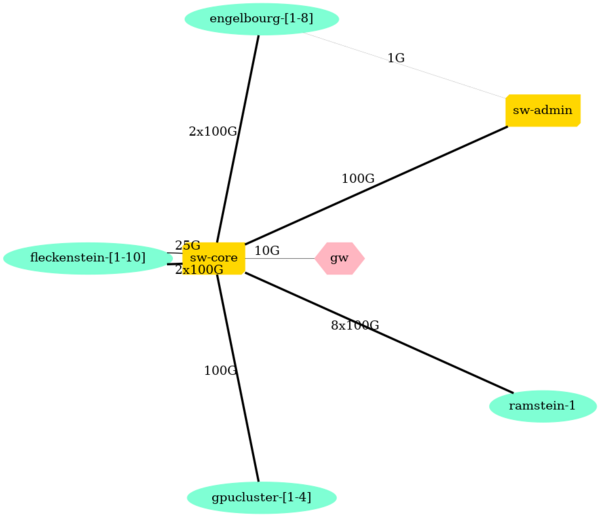

== Site physical topology ==

The Strasbourg site is made of nodes with numerous network interfaces:
* experimentation servers
** the '''fleckenstein''' cluster, composed of CPU servers
** a '''GPU cluster''', yet to be purchased and named, composed of servers equipped with GPUs
* experimentation switches
** the '''engelbourg''' cluster, composed of switches with Tofino ASICs
** the '''ramstein''' cluster, composed of one switch with a Tofino2 ASIC

The goal is to enable users to design complex and varied network topologies between servers and switches, thanks to:
* numerous direct interconnections between nodes
* an infrastructure switch (sw-core) that enables reconfigurable L2 interconnections

[[File:StrasbourgNetwork without peer.png|thumbnail|center|600px]]

[[File:CurrStrasbourgNetwork.png|thumbnail|center|700px]]

== Tables of interconnections ==

=== Links to sw-core ===

All nodes of all clusters have several interfaces connected to the sw-core switch. This architecture enables users to reconfigure the network and create L2 links on demand, between all nodes (engelbourg, ramstein, fleckenstein, gpucluster) of the Strasbourg site.

{| class="wikitable"
! A !! Link !! B
|-
| rowspan="6" | sw-core || 3x100G || engelbourg-[1-8]
|-
| 8x100G || ramstein-1
|-
| 2x100G || rowspan="2" | fleckenstein-[1-10]
|-
| 1x25G?
|-
| ? || GPU/SmartNICs cluster
|-
| 1x100G || gw (uplink)
|}

Open questions:
* Should the 25G interfaces of fleckenstein be connected to gw rather than to sw-core?
* In that case, sw-core needs 3x8 (engelbourg) + 8x1 (ramstein) + 2x10 (fleckenstein) + 1 (gw) = 53 100G ports.
* If sw-core has 64x100G ports, that leaves 11x100G ports for the GPU and/or SmartNICs (DPU, FPGA, ...) cluster?
* What about a 400G sw-core switch (with 100G breakout)? SmartNICs can have 400G ports.
* GPU / SmartNICs cluster interconnection vs. its location in the DC (not close to the current clusters?).
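The port arithmetic in the open questions can be checked with a short script. This is only a sketch: the per-cluster link counts come from the table above, while the function names and the 4x100G breakout factor for a 400G switch are assumptions made for illustration.

```python
import math

# 100G links to sw-core, taken from the "Links to sw-core" table:
# cluster -> (links per node, number of nodes).
# The fleckenstein 25G interfaces are left out, as in the open question.
LINKS_TO_SW_CORE = {
    "engelbourg": (3, 8),
    "ramstein": (8, 1),
    "fleckenstein": (2, 10),
    "gw": (1, 1),
}


def ports_needed(links):
    """Total number of 100G ports used on sw-core."""
    return sum(per_node * nodes for per_node, nodes in links.values())


def ports_left(total_ports, links):
    """100G ports still free on a switch with `total_ports` ports."""
    return total_ports - ports_needed(links)


def breakout_ports_needed(links, breakout=4):
    """Physical ports needed if each one is split into `breakout` 100G links.

    breakout=4 (one 400G port -> 4x100G) is an assumption, not a site decision.
    """
    return math.ceil(ports_needed(links) / breakout)


if __name__ == "__main__":
    print("100G ports needed:", ports_needed(LINKS_TO_SW_CORE))
    print("left on a 64-port switch:", ports_left(64, LINKS_TO_SW_CORE))
    print("400G ports with 4x100G breakout:", breakout_ports_needed(LINKS_TO_SW_CORE))
```

Changing one entry of the dictionary (for example, adding a hypothetical GPU cluster) immediately shows whether a 64-port switch is still sufficient.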

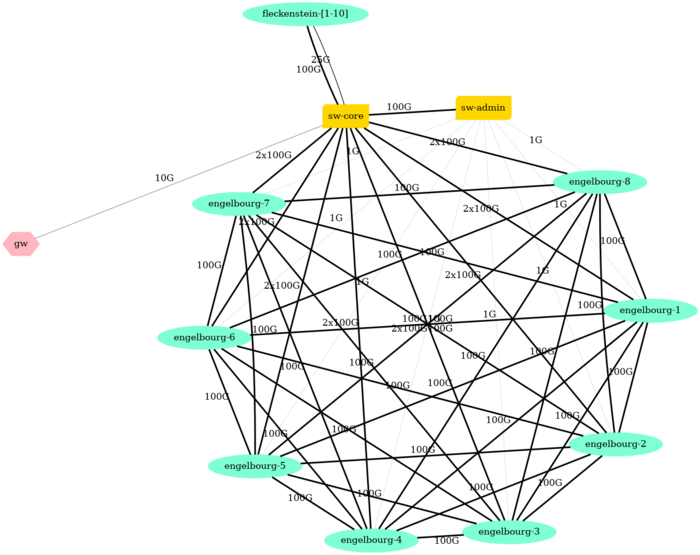

=== Node-to-node direct interconnections ===

In addition to the configurable links to sw-core, direct interconnections between the nodes are described in the table below.

{| class="wikitable"
! A !! Link !! B
|-
| engelbourg-[1-8] || 2x100G || engelbourg-[1-8]
|-
| ramstein-1 || 2x100G || engelbourg-[1-8]
|-
| fleckenstein-[1-5] || 4x25G || engelbourg-[1-4]
|-
| fleckenstein-[6-10] || 4x25G || engelbourg-[5-8]
|}
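The node sets in the table use a compact range notation such as <code>fleckenstein-[6-10]</code>. As an illustration, the sketch below expands that notation and parses the link column. The helper names are hypothetical, and the code deliberately does not guess which node of set A is wired to which node of set B, since the table does not say.

```python
import re

# Matches names such as "engelbourg-[1-8]" (prefix followed by a numeric range).
_RANGE = re.compile(r"^(?P<prefix>.*)\[(?P<first>\d+)-(?P<last>\d+)\]$")

# Rows of the table above: (node set A, link, node set B).
DIRECT_LINKS = [
    ("engelbourg-[1-8]", "2x100G", "engelbourg-[1-8]"),
    ("ramstein-1", "2x100G", "engelbourg-[1-8]"),
    ("fleckenstein-[1-5]", "4x25G", "engelbourg-[1-4]"),
    ("fleckenstein-[6-10]", "4x25G", "engelbourg-[5-8]"),
]


def expand(nodeset):
    """Expand "name-[a-b]" into a list of node names; plain names pass through."""
    match = _RANGE.match(nodeset)
    if match is None:
        return [nodeset]
    first, last = int(match["first"]), int(match["last"])
    return [f"{match['prefix']}{i}" for i in range(first, last + 1)]


def parse_link(link):
    """Split a link description such as "4x25G" into (count, speed)."""
    count, speed = link.split("x", 1)
    return int(count), speed


if __name__ == "__main__":
    for a, link, b in DIRECT_LINKS:
        count, speed = parse_link(link)
        print(f"{expand(a)} <-> {expand(b)}: {count} link(s) at {speed}")
```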

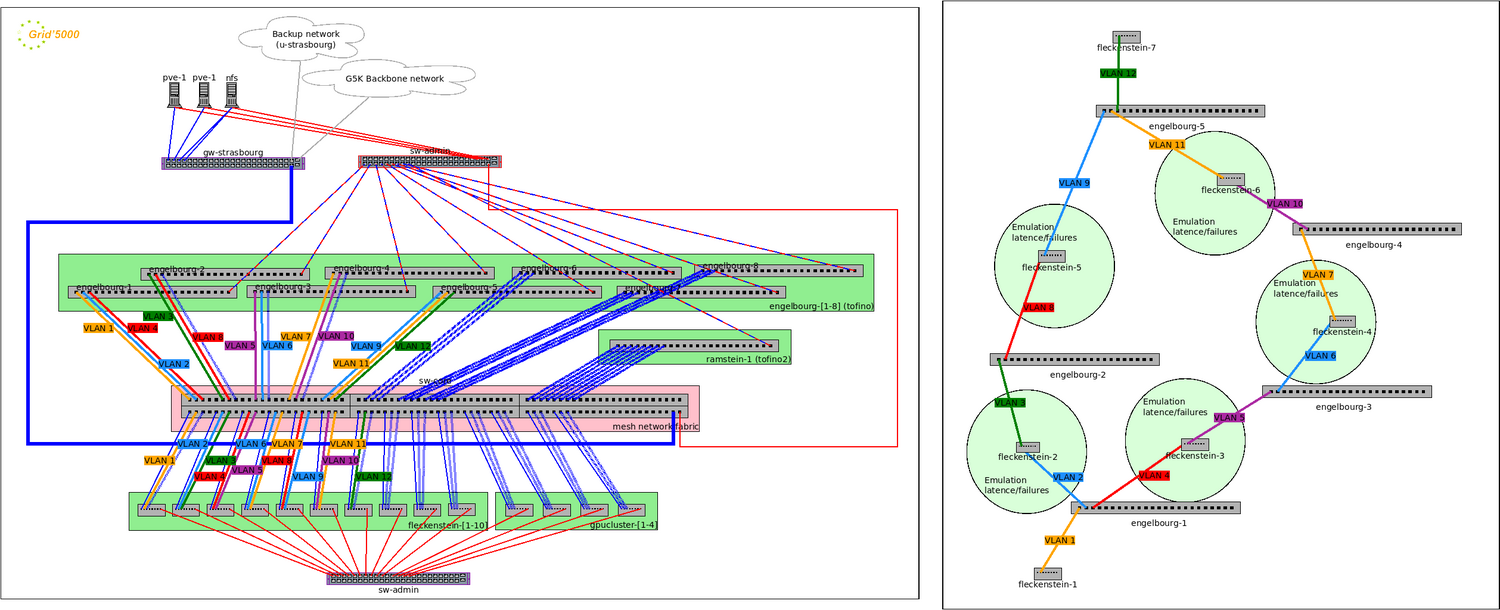

== Testbed topology emulation ==

The goal is to let users design interconnection topologies between the core-network experimentation switches and the server nodes.

In the figure below, the right-hand side shows an example of an emulated network topology, and the left-hand side shows the associated real network topology.

[[File:G5k-strasbourg-network-v2.png|1500px]]

Users will be able to fully reinstall and reconfigure all experimental switches and nodes in the green boxes, thanks to Kadeploy.

Users will be able to configure VLANs on the sw-core switch (pink box) thanks to KaVLAN, thus creating the L2 links between the experimental equipment.

A tool such as [https://distem.gitlabpages.inria.fr/ distem] may be installed on servers to emulate latency/failures.
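To illustrate how VLANs on sw-core can turn physical ports into emulated point-to-point L2 links, here is a minimal model. It assumes one dedicated VLAN per emulated link and untagged ports that belong to a single VLAN. It does not use the KaVLAN API; the function name, the port names, and the starting VLAN ID are all hypothetical.

```python
from itertools import count


def allocate_vlans(emulated_links, first_vlan=100):
    """Assign one dedicated VLAN ID to each emulated point-to-point link.

    emulated_links: iterable of (port_a, port_b), the two sw-core ports
    that should end up in the same L2 segment.
    Returns a dict mapping VLAN ID -> (port_a, port_b).
    Raises ValueError if a port appears twice, because an untagged port
    can only belong to one VLAN in this simplified model.
    """
    vlan_ids = count(first_vlan)
    used_ports = set()
    plan = {}
    for port_a, port_b in emulated_links:
        for port in (port_a, port_b):
            if port in used_ports:
                raise ValueError(f"port {port!r} is already part of another link")
            used_ports.add(port)
        plan[next(vlan_ids)] = (port_a, port_b)
    return plan


if __name__ == "__main__":
    # Hypothetical port names: "<node>:<interface>".
    links = [
        ("fleckenstein-1:eth2", "engelbourg-1:port1"),
        ("engelbourg-1:port2", "ramstein-1:port1"),
    ]
    for vlan, (a, b) in allocate_vlans(links).items():
        print(f"VLAN {vlan}: {a} <-> {b}")
```

Each node has several interfaces connected to sw-core, so a node can take part in several emulated links as long as each link uses a different interface.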

== Instrumentation of the NOS ==

The goal of the project is to give users full control over the operating system of network switches, as is already possible on experimentation servers.

With the arrival of "white box switches" on the market, the following observations can be made:
* Nowadays, many switches actually run a GNU/Linux-based operating system (e.g., OpenSwitch, SONiC, Cumulus), which is a derivative of general-purpose server distributions (e.g., Debian).
* From the hardware viewpoint, they are very server-like, because they are assembled from standard commodity parts. For example, the engelbourg experimental switches (Edge-core Wedge100BF) have the following specs:
** x86 CPU
** Storage drive (SSD)
** Management NIC that is just a classical CPU-attached NIC
** BMC (out-of-band power-on/off, serial console, monitoring, ...)
* Of course, they also feature an ASIC (e.g., Tofino) for the offload of network functions:
** That ASIC requires a dedicated Linux kernel and/or driver.
** But it can be treated like any other accelerator, such as the GPUs or FPGAs installed in servers. Thus, it can be managed like an accelerator card, with no impact on the bare-metal OS deployment mechanism.

The conclusion is that a cluster of white-box switches can be managed like a cluster of servers from the operating system and hardware perspective (apart from the network cabling, of course, discussed above).

In particular, this means that while ONIE is nowadays the standard mechanism to install a NOS, it can be replaced by other server installation mechanisms, which allow more flexibility for experimentation (frequent reinstallation, customization, configuration traceability).

Grid'5000 uses the Kadeploy software to play that role on any hardware of the platform. Work is in progress to allow users to "kadeploy" off-the-shelf NOS images in the ONIE format, using one of two options:
* using an image converter (e.g., a Kameleon recipe) that creates Kadeploy environments from ONIE images
* adding ONIE NOS image support to the Kadeploy installer miniOS

Right now, it is already possible to deploy a vanilla Debian with the Intel P4 Studio software suite and run some experiments on the Tofino ASIC.

In conclusion, the engelbourg cluster of experimental switches will be managed just like the other Grid'5000 clusters of servers, benefiting from all the powerful features provided by the Grid'5000 stack (resource reservation, bare-metal parallel deployment tool, recipe-based OS image builder, hardware reference repository, hardware sanity certification, system regression tests, and so on).

== Wattmeters ==

All nodes will be equipped with 0.1W/100Hz wattmeters, whose measurements are collected in [[Monitoring_Using_Kwollect|Kwollect]].
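As an example of what such measurements make possible, the sketch below integrates a series of power samples into an energy figure. It assumes that "100Hz" is the sampling rate and that samples are evenly spaced. The function names are hypothetical and the code does not query Kwollect; it only shows the arithmetic applied to already retrieved values.

```python
def energy_joules(power_watts, sample_rate_hz=100.0):
    """Approximate energy (J) from evenly spaced power samples (W).

    Uses a simple rectangle rule: each sample is assumed to hold
    for one sampling period of 1 / sample_rate_hz seconds.
    """
    period_s = 1.0 / sample_rate_hz
    return sum(power_watts) * period_s


def joules_to_watt_hours(joules):
    """Convert joules to watt-hours (1 Wh = 3600 J)."""
    return joules / 3600.0


if __name__ == "__main__":
    # One second of made-up samples at a constant 150 W.
    samples = [150.0] * 100
    joules = energy_joules(samples)
    print(f"{joules:.1f} J = {joules_to_watt_hours(joules):.4f} Wh")
```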

== References ==

=== Taxonomy of NICs: SmartNICs, DPU, FPGA... ===

* [https://www.fs.com/eu-en/blog/fs-smartnic-solutions-understanding-asic-fpga-and-dpu-architectures-26648.html FS SmartNIC Solutions: Understanding ASIC, FPGA, and DPU Architectures (2025)]
* [https://arxiv.org/html/2504.03653v1 A Survey on Heterogeneous Computing Using SmartNICs and Emerging Data Processing Units (2025)]
* [https://arxiv.org/html/2405.09499v1 A Comprehensive Survey on SmartNICs: Architectures, Development Models, Applications, and Research Directions (2024)]
* [https://www.intel.in/content/dam/www/central-libraries/xa/en/documents/2023-12/soc-track-smart-nics-1-.pdf Smart NICs – Evolution and Future Trends (2023)]
* [https://cloudswit.ch/blogs/the-most-complete-dpu-smartnic-vendors-with-its-product-line-summary/ The Most Comprehensive DPU/SmartNIC Vendors with its Product Line Summary (2022)]
* [https://www.servethehome.com/dpu-vs-smartnic-sth-nic-continuum-framework-for-discussing-nic-types/ DPU vs SmartNIC and the STH NIC Continuum Framework (2021)]

Latest revision as of 15:06, 14 October 2025
