FPGA

Note: This page is actively maintained by the Grid'5000 team. If you encounter problems, please report them (see the Support page). Additionally, as it is a wiki page, you are free to make minor corrections yourself if needed. If you would like to suggest a more fundamental change, please contact the Grid'5000 team.

As of August 2022, Grid'5000 features 2 nodes, each equipped with one AMD/Xilinx FPGA. This document gives specific information on how those FPGAs can be used in Grid'5000.

Hardware installation description

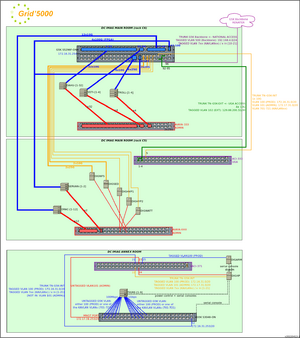

The Grenoble site of Grid'5000 hosts 2 servers (Servan cluster), each equipped with an AMD/Xilinx FPGA plugged into a PCIe slot. The FPGAs are AMD/Xilinx Alveo U200 cards, listed in the Xilinx catalog as Datacenter Accelerator cards.

Detailed specifications are provided here (cards with passive thermal cooling).

Technically, the installation of those FPGAs in the Servan nodes has the following characteristics:

- JTAG

- JTAG programming is provided on the Xilinx Alveo U200 via a USB port on the card. In the Grid'5000 installation, this JTAG port is connected to a USB port of the hosting machine itself. Thus, JTAG programming of the FPGA hosted in servan-1 (resp. servan-2) can be done (e.g. with Vivado) from servan-1 (resp. servan-2) itself.

- Ethernet ports

- Both Ethernet ports of each FPGA are connected to the site network along with all servers of the site.

- The FPGA Ethernet ports are not shown as NICs in the operating system of the hosting machine (unless the FPGA is programmed to do so).

- The ports are connected to 100Gbps ports on the Grenoble site router. The router ports are configured with Auto-Negotiation disabled and Speed forced to 100Gbps (the links did not work otherwise, as far as we tested).

- Kavlan can reconfigure the FPGA Ethernet ports just like any NIC of a server of the site (including the servan servers' own NICs). FPGA ports are named servan-1-fpga0, servan-1-fpga1, servan-2-fpga0, servan-2-fpga1 in kavlan. IP addresses are provided via DHCP to the FPGA ports in kavlan where the DHCP service is available.

- Note: using the 100Gbps capability of the FPGA ports requires acquiring a free-of-charge Xilinx license.

- Wattmeter

- The energy consumption of each servan node is measured by a wattmeter. Measurements are available in Kwollect.

- Energy consumption can also be retrieved using Xilinx tools (e.g. xbutil, xbtop) from the host operating system when the FPGA is running XRT (see below), or through the CMS IP (https://www.xilinx.com/products/intellectual-property/cms-subsystem.html) if using a Vivado flow.

- Licenses

- The FPGA software stack, IP, etc. are subject to licenses (EULA to be signed, etc.). See the Xilinx FAQ. Grid'5000 does not provide licenses. It is left to the end user to obtain the required licenses (some are free of charge).

Finally, as for any machine available in Grid'5000, the user can choose the operating system of the hosts (e.g. CentOS or Ubuntu).
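The kavlan names of the FPGA Ethernet ports given in the list above follow a regular pattern (servan-N-fpgaP). When scripting VLAN changes, it can be convenient to generate them rather than type them. The snippet below is a minimal sketch in Python; the function name `fpga_port_names` is hypothetical and only reproduces the four names listed on this page.

```python
def fpga_port_names(nodes=(1, 2), ports_per_fpga=2):
    """Return the kavlan names of the FPGA Ethernet ports.

    The naming pattern (servan-<node>-fpga<port>) is taken from the list
    above; the helper itself is illustrative, not a Grid'5000 tool.
    """
    return [
        f"servan-{node}-fpga{port}"
        for node in nodes
        for port in range(ports_per_fpga)
    ]


if __name__ == "__main__":
    # One port name per line, in node order then port order.
    for name in fpga_port_names():
        print(name)
```

Calling `fpga_port_names(nodes=(2,))` restricts the result to the two ports of the FPGA hosted in servan-2.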

Using the FPGA

Programming

FPGA can be used in several ways:

- either using higher-level abstractions, e.g. using Xilinx's Vitis.

- or using lower-level abstractions, e.g. using Xilinx's Vivado.

When used with the higher-level abstractions with Vitis, the FPGA card is managed by the XRT framework, and the card shows as a datacenter accelerator card. However, it is sometimes necessary to program the card at a lower level, for instance to program it as a network card (NIC). In such a case, the card is fully reprogrammed, so that even its PCI id changes. Hence, depending on their needs, users have to decide at what level they want to program the FPGA.

Regarding the programming of the operations of the FPGA (i.e. deploying a program on the FPGA) with Vivado, several options are also available:

- Via PCI-e.

- PCI-e programming may not be available, as it requires the design currently running on the FPGA to already provide PCI-e support for programming.

- Via JTAG, by flashing the program (using a .mcs file) to the embedded non-volatile memory of the PCI board, which sits beside the FPGA.

- Flashing the non-volatile memory requires a subsequent cold reboot of the hosting server to make the FPGA utilize the flashed program. It makes the programming persistent, which means flashing a factory golden image will be required to revert the FPGA back to its original operating mode.

- Via JTAG, by flashing directly in the FPGA's volatile memory (using a bitstream, .bit file).

- When the volatile memory is programmed, the FPGA runs the program straight away. A warm reboot may be required for the program to become functional (e.g. if it modifies the PCI-e interface). A cold reboot reverts the FPGA to the program installed in the non-volatile memory of the board.

As a result, it is strongly recommended to program the FPGA via JTAG in the VOLATILE memory, so that the new programming is NOT persistent and the FPGA returns to its default operating mode after a cold reboot, typically after the reservation/job.
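The difference between the two JTAG options described above can be summarized as a small lookup table. The following Python sketch only encodes what this page states (file type, persistence, when the program takes effect); the class and function names are hypothetical, and PCI-e programming is left out because its availability depends on the design already loaded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JtagTarget:
    memory: str          # where the program is written
    file_extension: str  # file type used for programming
    persistent: bool     # True if the program survives a cold reboot
    takes_effect: str    # when the FPGA starts running the program


# Values below restate the two JTAG options described on this page.
NON_VOLATILE = JtagTarget(
    memory="non-volatile memory of the board",
    file_extension=".mcs",
    persistent=True,
    takes_effect="after a cold reboot of the hosting server",
)
VOLATILE = JtagTarget(
    memory="volatile memory of the FPGA",
    file_extension=".bit",
    persistent=False,
    takes_effect="immediately (a warm reboot may be required)",
)


def recommended_target():
    """Return the non-persistent option, as recommended above."""
    return next(t for t in (NON_VOLATILE, VOLATILE) if not t.persistent)


if __name__ == "__main__":
    target = recommended_target()
    print(f"Recommended: {target.memory} ({target.file_extension} file)")
```

The point of the table is the `persistent` flag: only the non-volatile option requires flashing a golden image to get back to the original operating mode.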

FPGA software stack

Using supported system environments

The servan nodes, just like all Grid'5000 nodes, run Debian stable by default. The AMD/Xilinx FPGA software stack is not available in that operating system environment.

AMD/Xilinx supports a limited list of operating systems to operate the FPGA, see here.

The AMD/Xilinx FPGA software stack can be installed on top of an Ubuntu (e.g. ubuntu2004-min) or CentOS (e.g. centos8-min) environment supported by the technical team, after deploying it on the node with Kadeploy.

See https://www.xilinx.com/products/boards-and-kits/alveo/u200.html#gettingStarted.

User-contributed Xilinx software environment

A user-contributed Kadeploy environment named ubuntu2004-fpga, recorded in the Kadeploy registry in Pierre Neyron's userspace (pneyron), includes the Xilinx tools. Anyone may deploy it from an OAR job of type deploy, giving pneyron as the environment owner.
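As a minimal sketch of such a deployment, the Python snippet below assembles a kadeploy3 command line from the environment name and owner given above. It assumes the usual kadeploy3 options (-e for the environment name, -u for the user owning the environment, -f for the node file, -k to copy the SSH key) and the $OAR_NODE_FILE variable set by OAR inside a job; check them against the Kadeploy documentation before use. The helper name `kadeploy_argv` is hypothetical, and the snippet only prints the command without running it.

```python
def kadeploy_argv(env_name, env_user, node_file):
    """Build the argument list for a kadeploy3 invocation.

    Assumed options: -e environment name, -u environment owner,
    -f file listing the nodes to deploy, -k copy the user's SSH key.
    """
    return ["kadeploy3", "-e", env_name, "-u", env_user, "-f", node_file, "-k"]


if __name__ == "__main__":
    # "$OAR_NODE_FILE" is kept literal so the printed line can be pasted
    # into a shell inside the OAR job, where the variable is defined.
    argv = kadeploy_argv("ubuntu2004-fpga", "pneyron", "$OAR_NODE_FILE")
    print(" ".join(argv))
```

To actually launch the deployment from Python, the same list (with the real node file path) could be passed to `subprocess.run`.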

Because of the large disk space that they require (~50GB), Xilinx's Vitis and Vivado tools are however (pre-)installed separately on a shared NFS storage (mounted in /tools/ in the deployed ubuntu2004-fpga system). Those tools are subject to an end-user license agreement (EULA). Access to that shared NFS storage can be granted on request by contacting Pierre Neyron.

Note: This ubuntu2004-fpga system environment is built using a kameleon recipe available at https://gitlab.inria.fr/neyron/ubuntu2004-fpga. You may modify the recipe and rebuild your own image, adapted to your needs. An image on top of centos8-min could also be built.

Note: The 2 servan nodes are shut down by default (standby in the OAR terminology), which means that after reserving with

Some links

- Xilinx Developer program

- Some usages with Vitis

- Some projects making use of the Ethernet ports of the FPGAs

- Tutorials